https://www.rdoproject.org/networking/networking-in-too-much-detail/

https://blogs.oracle.com/ronen/entry/diving_into_openstack_network_architecture1

https://www.gitbook.com/book/yeasy/openstack_understand_neutron/details

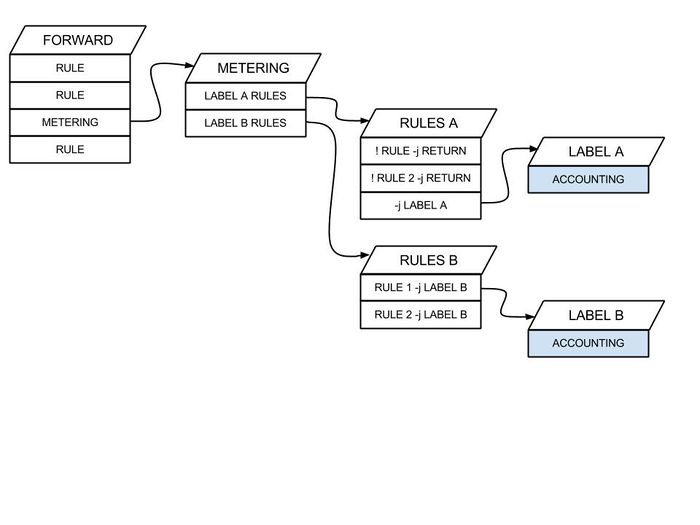

This diagram is important because it shows the Neutron networking architecture clearly.

So, I have a VM (instance), shown in the listing below:

+--------------------------------------+-------------------------------------------------------+--------+------------+-------------+--------------------------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+-------------------------------------------------------+--------+------------+-------------+--------------------------------------------+

| aa2f621c-65e0-4d89-bb6d-c66054ee9250 | mi--lbod6yoctnm3-0-jbcgnagn4dck-instance-xvti2docwkl4 | ACTIVE | - | Running | default_network=192.168.100.1, 10.14.1.154 |

+--------------------------------------+-------------------------------------------------------+--------+------------+-------------+--------------------------------------------+

The instance ID is aa2f621c-65e0-4d89-bb6d-c66054ee9250.

root@node-6

+--------------------------------------+---------------------------------------------------------------+

| Property | Value |

+--------------------------------------+---------------------------------------------------------------+

| OS-DCF

| OS-EXT-AZ

| OS-EXT-SRV-ATTR

| OS-EXT-SRV-ATTR

| OS-EXT-SRV-ATTR

| OS-EXT-STS

| OS-EXT-STS

| OS-EXT-STS

| OS-SRV-USG:launched_at | 2016-01-25T06:17:13.000000 |

| OS-SRV-USG

| accessIPv4 | |

| accessIPv6 | |

| config_drive | |

|

| default_network network | 192.168.100.1, 10.14.1.154 |

|

|

| id | aa2f621c-65e0-4d89-bb6d-c66054ee9250 |

|

| key_name | 2d8ed97974a34782ba3c1eda2cc1f705 |

|

|

|

|

| security_groups | default_sg |

|

| tenant_id | fc48558ea8684d14a1da30f6c5028064 |

|

| user_id | 2d8ed97974a34782ba3c1eda2cc1f705 |

+--------------------------------------+---------------------------------------------------------------+

$ nova-manage

mi--lbod6yoctnm3-0-jbcgnagn4dck-instance-xvti2docwkl4 node-5.domain.tld 1core2GBmemory20GBdisk active 2016-01-25 06:17:13 0d95c5a8-ea4e-45a4-ba79-6b1c8f2acf46 fc48558ea8684d14a1da30f6c5028064 2d8ed97974a34782ba3c1eda2cc1f705 nova 0

From here, we can walk through the Neutron networking on the compute node and on the network (Neutron service) node.

Compute Node:

Find the interface and bridge that belong to our VM

$ root@node-5


$ root@node-5:/var/lib/nova/instances/aa2f621c-65e0-4d89-bb6d-c66054ee9250# iptables -S | grep tape7b56cc3-8f

-A neutron-

-A neutron-

-A neutron-

-A neutron-

-A neutron-

The security group rules are applied through the following chain:

$ root@node-5:/var/lib/nova/instances/aa2f621c-65e0-4d89-bb6d-c66054ee9250# iptables -L neutron-openvswi-sg-chain | grep tape7b56cc3-8f

root@node-5

....

qbre7b56cc3-8f 8000.1ab45f3f1100 no qvbe7b56cc3-8f

tape7b56cc3-8f

....

$

....

Port "qvoe7b56cc3-8f"

Interface "qvoe7b56cc3-8f"

Bridge

Port

Interface

Port "p_br-prv-0"

Interface "p_br-prv-0"

Port

Interface

Bridge

Port "p_br-floating-0"

Interface "p_br-floating-0"

Port

Interface

ovs_version: "2.3.1"

Alternatively, use the virsh command:

# virsh list

# virsh domiflist <your instance>

Find the bridge that the VM's interface is connected to

# brctl show qbr10257204-b0

Find veth pairs

# ethtool -S qvb10257204-b0

# ip link list | grep '41: '
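The two commands above work together: `ethtool -S` on one end of a veth pair reports the interface index of its peer (`peer_ifindex`), and `ip link list` maps that index back to a name. Here is a minimal Python sketch of that lookup. The sample outputs are hypothetical (they were not captured from this host); only the index 41 and the name qvb10257204-b0 come from the commands above, and the peer name qvo10257204-b0 is an assumption based on the qvb/qvo naming seen earlier.

```python
import re


def peer_ifindex(ethtool_stats: str) -> int:
    """Extract peer_ifindex from the text printed by `ethtool -S <veth>`."""
    match = re.search(r"peer_ifindex:\s*(\d+)", ethtool_stats)
    if match is None:
        raise ValueError("no peer_ifindex found; is this a veth device?")
    return int(match.group(1))


def name_for_ifindex(ip_link_output: str, ifindex: int) -> str:
    """Find the interface name for a given index in `ip link list` output."""
    for line in ip_link_output.splitlines():
        # Header lines look like "41: name: <FLAGS>" or "41: name@peer: <FLAGS>".
        match = re.match(r"(\d+):\s+([^:@\s]+)", line)
        if match and int(match.group(1)) == ifindex:
            return match.group(2)
    raise KeyError(ifindex)


# Hypothetical sample output, for illustration only.
SAMPLE_ETHTOOL = "NIC statistics:\n     peer_ifindex: 41\n"
SAMPLE_IP_LINK = (
    "40: qvb10257204-b0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500\n"
    "    link/ether 00:00:00:00:00:01 brd ff:ff:ff:ff:ff:ff\n"
    "41: qvo10257204-b0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500\n"
)

index = peer_ifindex(SAMPLE_ETHTOOL)
print(index, name_for_ifindex(SAMPLE_IP_LINK, index))
```

On a real host, the two strings would come from running the commands shown above, for example through `subprocess.run(..., capture_output=True, text=True)`.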

OVS flow tables

$ root@node-5:/var/lib/nova/instances/aa2f621c-65e0-4d89-bb6d-c66054ee9250# ovs-ofctl dump-flows br-floating

NXST_FLOW reply (xid=0x4):

cookie=0x0, duration=2944114

$ root@node-5:/var/lib/nova/instances/aa2f621c-65e0-4d89-bb6d-c66054ee9250# ovs-ofctl dump-flows br-prv

NXST_FLOW reply (xid=0x4):

cookie=0x0, duration=2943385

cookie=0x0, duration=1212275.443s, table=0, n_packets=119001, n_bytes=9280541, idle_age=1, hard_age=65534, priority=4,in_port=2,dl_vlan=53 actions=mod_vlan_vid:289,NORMAL

cookie=0x0, duration=2939213.555s, table=0, n_packets=40203246, n_bytes=7594046533, idle_age=0, hard_age=65534, priority=4,in_port=2,dl_vlan=1 actions=mod_vlan_vid:400,NORMAL

cookie=0x0, duration=443296.428s, table=0, n_packets=17855, n_bytes=1651227, idle_age=27, hard_age=65534, priority=4,in_port=2,dl_vlan=78 actions=mod_vlan_vid:388,NORMAL

cookie=0x0, duration=440574.253s, table=0, n_packets=90232, n_bytes=6655951, idle_age=14, hard_age=65534, priority=4,in_port=2,dl_vlan=81 actions=mod_vlan_vid:361,NORMAL

cookie=0x0, duration=430464.292s, table=0, n_packets=97628, n_bytes=7077738, idle_age=1, hard_age=65534, priority=4,in_port=2,dl_vlan=87 actions=mod_vlan_vid:309,NORMAL

cookie=0x0, duration=286613.370s, table=0, n_packets=74230, n_bytes=5428601, idle_age=1, hard_age=65534, priority=4,in_port=2,dl_vlan=91 actions=mod_vlan_vid:321,NORMAL

cookie=0x0, duration=188.222s, table=0, n_packets=276, n_bytes=25628, idle_age=0, priority=4,in_port=2,dl_vlan=97 actions=mod_vlan_vid:374,NORMAL

cookie=0x0, duration=8355.066s, table=0, n_packets=2228, n_bytes=178873, idle_age=85, priority=4,in_port=2,dl_vlan=93 actions=mod_vlan_vid:255,NORMAL

cookie=0x0, duration=2869178.234s, table=0, n_packets=1063994, n_bytes=83782069, idle_age=19, hard_age=65534, priority=4,in_port=2,dl_vlan=2 actions=mod_vlan_vid:206,NORMAL

cookie=0x0, duration=1148558.787s, table=0, n_packets=41929, n_bytes=4005468, idle_age=14, hard_age=65534, priority=4,in_port=2,dl_vlan=55 actions=mod_vlan_vid:295,NORMAL

cookie=0x0, duration=436450.974s, table=0, n_packets=268258, n_bytes=26273333, idle_age=0, hard_age=65534, priority=4,in_port=2,dl_vlan=86 actions=mod_vlan_vid:305,NORMAL

cookie=0x0, duration=1134136.903s, table=0, n_packets=41386, n_bytes=3955238, idle_age=107, hard_age=65534, priority=4,in_port=2,dl_vlan=57 actions=mod_vlan_vid:274,NORMAL

cookie=0x0, duration=349629.927s, table=0, n_packets=12556, n_bytes=1235546, idle_age=4, hard_age=65534, priority=4,in_port=2,dl_vlan=88 actions=mod_vlan_vid:325,NORMAL

cookie=0x0, duration=956159.621s, table=0, n_packets=1414006, n_bytes=2522828496, idle_age=6, hard_age=65534, priority=4,in_port=2,dl_vlan=68 actions=mod_vlan_vid:383,NORMAL

cookie=0x0, duration=444184.489s, table=0, n_packets=338, n_bytes=37368, idle_age=65534, hard_age=65534, priority=4,in_port=2,dl_vlan=76 actions=mod_vlan_vid:212,NORMAL

cookie=0x0, duration=341208.593s, table=0, n_packets=4535, n_bytes=806468, idle_age=104, hard_age=65534, priority=4,in_port=2,dl_vlan=89 actions=mod_vlan_vid:307,NORMAL

cookie=0x0, duration=1046690.998s, table=0, n_packets=341, n_bytes=37606, idle_age=65534, hard_age=65534, priority=4,in_port=2,dl_vlan=63 actions=mod_vlan_vid:247,NORMAL

cookie=0x0, duration=1469822.661s, table=0, n_packets=54812, n_bytes=5159092, idle_age=83, hard_age=65534, priority=4,in_port=2,dl_vlan=47 actions=mod_vlan_vid:324,NORMAL

cookie=0x0, duration=609252.595s, table=0, n_packets=473, n_bytes=403134, idle_age=65534, hard_age=65534, priority=4,in_port=2,dl_vlan=72 actions=mod_vlan_vid:277,NORMAL

cookie=0x0, duration=2943385

$ root@node-5:/var/lib/nova/instances/aa2f621c-65e0-4d89-bb6d-c66054ee9250# ovs-ofctl dump-flows br-int

NXST_FLOW reply (xid=0x4):

cookie=0x0, duration=2943396

cookie=0x0, duration=1134146.751s, table=0, n_packets=51173, n_bytes=39495387, idle_age=1, hard_age=65534, priority=3,in_port=1,dl_vlan=274 actions=mod_vlan_vid:57,NORMAL

cookie=0x0, duration=443306.272s, table=0, n_packets=22067, n_bytes=18326623, idle_age=37, hard_age=65534, priority=3,in_port=1,dl_vlan=388 actions=mod_vlan_vid:78,NORMAL

cookie=0x0, duration=1046700.841s, table=0, n_packets=244, n_bytes=37459, idle_age=65534, hard_age=65534, priority=3,in_port=1,dl_vlan=247 actions=mod_vlan_vid:63,NORMAL

cookie=0x0, duration=8364.913s, table=0, n_packets=2939, n_bytes=3336868, idle_age=9, priority=3,in_port=1,dl_vlan=255 actions=mod_vlan_vid:93,NORMAL

cookie=0x0, duration=2869188.084s, table=0, n_packets=1110068, n_bytes=287840569, idle_age=1, hard_age=65534, priority=3,in_port=1,dl_vlan=206 actions=mod_vlan_vid:2,NORMAL

cookie=0x0, duration=1148568.632s, table=0, n_packets=51652, n_bytes=39880915, idle_age=24, hard_age=65534, priority=3,in_port=1,dl_vlan=295 actions=mod_vlan_vid:55,NORMAL

cookie=0x0, duration=609262.437s, table=0, n_packets=4995, n_bytes=345118, idle_age=14, hard_age=65534, priority=3,in_port=1,dl_vlan=277 actions=mod_vlan_vid:72,NORMAL

cookie=0x0, duration=1212285.287s, table=0, n_packets=127910, n_bytes=47848009, idle_age=5, hard_age=65534, priority=3,in_port=1,dl_vlan=289 actions=mod_vlan_vid:53,NORMAL

cookie=0x0, duration=1469832.507s, table=0, n_packets=66919, n_bytes=50958284, idle_age=93, hard_age=65534, priority=3,in_port=1,dl_vlan=324 actions=mod_vlan_vid:47,NORMAL

cookie=0x0, duration=430474.139s, table=0, n_packets=101531, n_bytes=22514877, idle_age=8, hard_age=65534, priority=3,in_port=1,dl_vlan=309 actions=mod_vlan_vid:87,NORMAL

cookie=0x0, duration=2939223.400s, table=0, n_packets=39197886, n_bytes=15878291311, idle_age=1, hard_age=65534, priority=3,in_port=1,dl_vlan=400 actions=mod_vlan_vid:1,NORMAL

cookie=0x0, duration=440584.097s, table=0, n_packets=94708, n_bytes=24882334, idle_age=1, hard_age=65534, priority=3,in_port=1,dl_vlan=361 actions=mod_vlan_vid:81,NORMAL

cookie=0x0, duration=349639.771s, table=0, n_packets=15123, n_bytes=10862299, idle_age=14, hard_age=65534, priority=3,in_port=1,dl_vlan=325 actions=mod_vlan_vid:88,NORMAL

cookie=0x0, duration=341218.441s, table=0, n_packets=4854, n_bytes=1123080, idle_age=113, hard_age=65534, priority=3,in_port=1,dl_vlan=307 actions=mod_vlan_vid:89,NORMAL

cookie=0x0, duration=956169.465s, table=0, n_packets=1976310, n_bytes=195788064, idle_age=2, hard_age=65534, priority=3,in_port=1,dl_vlan=383 actions=mod_vlan_vid:68,NORMAL

cookie=0x0, duration=444194.333s, table=0, n_packets=251, n_bytes=37638, idle_age=65534, hard_age=65534, priority=3,in_port=1,dl_vlan=212 actions=mod_vlan_vid:76,NORMAL

cookie=0x0, duration=436460.818s, table=0, n_packets=448207, n_bytes=582425969, idle_age=0, hard_age=65534, priority=3,in_port=1,dl_vlan=305 actions=mod_vlan_vid:86,NORMAL

cookie=0x0, duration=198.065s, table=0, n_packets=71, n_bytes=13149, idle_age=4, priority=3,in_port=1,dl_vlan=374 actions=mod_vlan_vid:97,NORMAL

cookie=0x0, duration=286623.215s, table=0, n_packets=76707, n_bytes=17851968, idle_age=6, hard_age=65534, priority=3,in_port=1,dl_vlan=321 actions=mod_vlan_vid:91,NORMAL

cookie=0x0, duration=2943395

cookie=0x0, duration=2943396

VLAN Translation

root@node-5

cookie=0x0, duration=97049.752s, table=0, n_packets=7155, n_bytes=6796405, idle_age=61, hard_age=65534, priority=3,in_port=1,dl_vlan=255 actions=mod_vlan_vid:93,NORMAL

This flow rewrites the external VLAN (segmentation ID) 255 to the internal VLAN 93.

root@node-5

cookie=0x0, duration=97125.859s, table=0, n_packets=5278, n_bytes=475415, idle_age=40, hard_age=65534, priority=4,in_port=2,dl_vlan=93 actions=mod_vlan_vid:255,NORMAL

This flow rewrites the internal VLAN 93 back to the external VLAN (segmentation ID) 255.
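To read a long `ovs-ofctl dump-flows` listing more easily, the translation rules can be reduced to a small lookup table. The following is a minimal Python sketch, not part of any OpenStack tooling; the function name `vlan_translations` is hypothetical, and the two sample flows are the ones quoted above (the first also appears in the br-int dump, the second in the br-prv dump).

```python
import re

# Matches the part of a flow entry that carries the VLAN rewrite.
FLOW_RE = re.compile(r"in_port=(\d+),dl_vlan=(\d+)\s+actions=mod_vlan_vid:(\d+)")


def vlan_translations(dump: str) -> dict:
    """Return {(in_port, matched_vlan): rewritten_vlan} for each translation flow."""
    table = {}
    for line in dump.splitlines():
        match = FLOW_RE.search(line)
        if match:
            in_port, matched, rewritten = map(int, match.groups())
            table[(in_port, matched)] = rewritten
    return table


BR_INT_FLOW = (
    "cookie=0x0, duration=97049.752s, table=0, n_packets=7155, n_bytes=6796405, "
    "idle_age=61, hard_age=65534, priority=3,in_port=1,dl_vlan=255 "
    "actions=mod_vlan_vid:93,NORMAL"
)
BR_PRV_FLOW = (
    "cookie=0x0, duration=97125.859s, table=0, n_packets=5278, n_bytes=475415, "
    "idle_age=40, hard_age=65534, priority=4,in_port=2,dl_vlan=93 "
    "actions=mod_vlan_vid:255,NORMAL"
)

print(vlan_translations(BR_INT_FLOW))  # external 255 -> internal 93
print(vlan_translations(BR_PRV_FLOW))  # internal 93 -> external 255
```

Lines without a `mod_vlan_vid` action, such as the truncated entries in the dumps above, are simply skipped.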

P.S: how to get

Show the OpenFlow port numbers of a bridge:

ovs-ofctl show <ovs-bridge>

Show the VLAN tag on each port:

ovs-vsctl show

Network Node:

Router List

root@node-6: ~# neutron --OS-tenant-name Danny router-list+--------------------------------------+-------------------------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|

+--------------------------------------+-------------------------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| 6a222d9d-71da-4db6-891b-87d4b6ee8536 | default_network-admin-router-rcg7xvxhll2i | {"network_id": "7cd5fc6c-e47a-420c-8d15-aa51747564d8", "enable_snat": true, "external_fixed_ips": [{"subnet_id": "8e2aa50c-cd0e-4596-97fb-dfc1ecc63245", "ip_address": "10.14.1.153"}]} |

+--------------------------------------+-------------------------------------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

root@node-6:~# ip net exec qrouter-6a222d9d-71da-4db6-891b-87d4b6ee8536 ip route

192.168.100.0/24 dev

root@node-6:~# ip net exec qrouter-6a222d9d-71da-4db6-891b-87d4b6ee8536 iptables -t nat -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N neutron-l3-agent-OUTPUT

-N neutron-l3-agent-POSTROUTING

-N neutron-l3-agent-PREROUTING

-N neutron-l3-agent-float-

-N neutron-l3-agent-

-N neutron-

-A PREROUTING -j neutron-l3-agent-PREROUTING

-A OUTPUT -j neutron-l3-agent-OUTPUT

-A POSTROUTING -j neutron-l3-agent-POSTROUTING

-A POSTROUTING -j neutron-

-A neutron-l3-agent-OUTPUT -d 10.14.1.154/32 -j DNAT --to-destination 192.168.100.1

-A neutron-l3-agent-POSTROUTING

-A neutron-l3-agent-PREROUTING -d 169.254.169.254/32 -p tcp -m tcp --

-A neutron-l3-agent-PREROUTING -d 10.14.1.154/32 -j DNAT --to-destination 192.168.100.1

-A neutron-l3-agent-float-

-A neutron-l3-agent-

-A neutron-l3-agent-

-A neutron-

P.S: The VM's floating IP is 10.14.1.154
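The DNAT rules in the router namespace are what tie the floating IP to the VM's fixed IP. As a rough illustration, the following Python sketch extracts that mapping from `iptables -t nat -S` output. The function name `floating_ip_map` is hypothetical, and the sample contains only the two complete DNAT rules shown above.

```python
import re

# Matches rules of the form: -A <chain> -d <floating>/32 -j DNAT --to-destination <fixed>
DNAT_RE = re.compile(r"-A (\S+) -d (\S+)/32 -j DNAT --to-destination (\S+)")


def floating_ip_map(rules: str) -> dict:
    """Return {floating_ip: fixed_ip} from the DNAT rules in `iptables -t nat -S` output."""
    mapping = {}
    for line in rules.splitlines():
        match = DNAT_RE.match(line.strip())
        if match:
            _chain, floating_ip, fixed_ip = match.groups()
            mapping[floating_ip] = fixed_ip
    return mapping


RULES = """\
-A neutron-l3-agent-OUTPUT -d 10.14.1.154/32 -j DNAT --to-destination 192.168.100.1
-A neutron-l3-agent-PREROUTING -d 10.14.1.154/32 -j DNAT --to-destination 192.168.100.1
"""

print(floating_ip_map(RULES))
```

Both chains carry the same translation, so the result has a single entry: floating IP 10.14.1.154 maps to fixed IP 192.168.100.1, which matches the two addresses listed for the instance at the top of this post.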

Network List

root@node-6

+--------------------------------------+-----------------+-------------------------------------------------------+

|

+--------------------------------------+-----------------+-------------------------------------------------------+

| 16b6d042-ce8b-4020-82e0-86a6829a3978 | default_network | 7d5eafc1-2c10-410d-a8e0-1dc648969fcd 192.168.100.0/24 |

| 7cd5fc6c-e47a-420c-8d15-aa51747564d8 | net04_ext | 8e2aa50c-cd0e-4596-97fb-dfc1ecc63245 |

+--------------------------------------+-----------------+-------------------------------------------------------+

Responsible network node for DHCP and L3

Now that you have the network's UUID, you can find the responsible node as follows:

root@node-6

+--------------------------------------+-------------------+----------------+-------+

|

+--------------------------------------+-------------------+----------------+-------+

| 34263976-2f1b-48bd-a40e-eb6f0e77c5f4 | node-6

+--------------------------------------+-------------------+----------------+-------+

To find the VLAN

root@node-6

+--------------------------+-------+

| Field | Value |

+--------------------------+-------+

|

+--------------------------+-------+

VLAN Translation

root@node-6

cookie=0x0, duration=97539.474s, table=0, n_packets=7188, n_bytes=6770236, idle_age=65, hard_age=65534, priority=4,in_port=2,dl_vlan=330 actions=mod_vlan_vid:255,NORMAL

root@node-6

cookie=0x0, duration=97562.780s, table=0, n_packets=5264, n_bytes=516743, idle_age=89, hard_age=65534, priority=3,in_port=1,dl_vlan=255 actions=mod_vlan_vid:330,NORMAL
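Comparing these flows with the ones on the compute node shows that the internal VLAN is local to each host (93 on node-5, 330 on node-6), while the external VLAN (segmentation ID 255) is shared. The Python sketch below checks that relationship using the match and action parts of the four flows quoted in this post; the helper names are hypothetical.

```python
import re


def translation(flow: str) -> tuple:
    """Return (matched_vlan, rewritten_vlan) for one translation flow."""
    match = re.search(r"dl_vlan=(\d+)\s+actions=mod_vlan_vid:(\d+)", flow)
    if match is None:
        raise ValueError("not a VLAN translation flow")
    return int(match.group(1)), int(match.group(2))


def is_inverse(outbound: str, inbound: str) -> bool:
    """True if the inbound flow undoes exactly what the outbound flow does."""
    out_match, out_rewrite = translation(outbound)
    in_match, in_rewrite = translation(inbound)
    return (out_match, out_rewrite) == (in_rewrite, in_match)


# Match/action parts of the flows quoted in this post.
NODE5_OUT = "priority=4,in_port=2,dl_vlan=93 actions=mod_vlan_vid:255,NORMAL"
NODE5_IN = "priority=3,in_port=1,dl_vlan=255 actions=mod_vlan_vid:93,NORMAL"
NODE6_OUT = "priority=4,in_port=2,dl_vlan=330 actions=mod_vlan_vid:255,NORMAL"
NODE6_IN = "priority=3,in_port=1,dl_vlan=255 actions=mod_vlan_vid:330,NORMAL"

print(is_inverse(NODE5_OUT, NODE5_IN), is_inverse(NODE6_OUT, NODE6_IN))
# Both hosts rewrite to the same external VLAN, from different internal VLANs.
print(translation(NODE5_OUT)[1] == translation(NODE6_OUT)[1])
print(translation(NODE5_OUT)[0] != translation(NODE6_OUT)[0])
```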

To find the DHCP namespace

root@node-6:~# ip netns | grep 16b6d042-ce8b-4020-82e0-86a6829a3978

qdhcp-16b6d042-ce8b-4020-82e0-86a6829a3978
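The namespace names seen in this post follow a simple pattern: `qdhcp-` plus the network UUID for the DHCP namespace, and `qrouter-` plus the router UUID for the router namespace. A minimal Python sketch of that naming and of the `grep` step follows; the helper names and the sample `ip netns` output are hypothetical, while the UUIDs are the ones from this post.

```python
def dhcp_namespace(network_id: str) -> str:
    """Namespace name used for the DHCP server of a network."""
    return f"qdhcp-{network_id}"


def router_namespace(router_id: str) -> str:
    """Namespace name used for a virtual router."""
    return f"qrouter-{router_id}"


def find_namespaces(ip_netns_output: str, uuid: str) -> list:
    """Equivalent of `ip netns | grep <uuid>`, returning only the namespace names."""
    return [
        line.split()[0]
        for line in ip_netns_output.splitlines()
        if line.strip() and uuid in line
    ]


NETWORK_ID = "16b6d042-ce8b-4020-82e0-86a6829a3978"
ROUTER_ID = "6a222d9d-71da-4db6-891b-87d4b6ee8536"

# Hypothetical `ip netns` output containing the two namespaces used in this post.
SAMPLE_NETNS = f"qrouter-{ROUTER_ID}\nqdhcp-{NETWORK_ID}\n"

print(find_namespaces(SAMPLE_NETNS, NETWORK_ID))
print(router_namespace(ROUTER_ID))
```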

root@node-6:~# ps aux | grep 16b6d042-ce8b-4020-82e0-86a6829a3978

nobody 11958 0.0 0.0 28204 1048 ? S Jan25 0:00 dnsmasq --no-hosts --no-resolv --strict-order --bind-interfaces --interface=tap74d4769b-5b --except-interface=lo --pid-file=/var/lib/neutron/dhcp/16b6d042-ce8b-4020-82e0-86a6829a3978/pid --dhcp-hostsfile=/var/lib/neutron/dhcp/16b6d042-ce8b-4020-82e0-86a6829a3978/host --addn-hosts=/var/lib/neutron/dhcp/16b6d042-ce8b-4020-82e0-86a6829a3978/addn_hosts --dhcp-optsfile=/var/lib/neutron/dhcp/16b6d042-ce8b-4020-82e0-86a6829a3978/opts --dhcp-leasefile=/var/lib/neutron/dhcp/16b6d042-ce8b-4020-82e0-86a6829a3978/leases --dhcp-range=set:tag0,192.168.100.0,static,600s --dhcp-lease-max=256 --conf-file=/etc/neutron/dnsmasq-neutron.conf --domain=openstacklocal

Subnet List

root@node-6

+--------------------------------------+---------------------------------------------------+------------------+------------------------------------------------------+

|

+--------------------------------------+---------------------------------------------------+------------------+------------------------------------------------------+

| 7d5eafc1-2c10-410d-a8e0-1dc648969fcd | default_network-admin-private_subnet-kvrtht6fwqhr | 192.168.100.0/24 | {"start": "192.168.100.1", "end": "192.168.100.253"} |

+--------------------------------------+---------------------------------------------------+------------------+------------------------------------------------------+
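The allocation pool is narrower than the subnet itself, which is easy to overlook when reading the table. As a small illustration using Python's standard `ipaddress` module, the sketch below checks the three port addresses that appear in this post against the pool and the CIDR; the helper name `in_pool` is hypothetical.

```python
import ipaddress

SUBNET = ipaddress.ip_network("192.168.100.0/24")
POOL_START = "192.168.100.1"
POOL_END = "192.168.100.253"


def in_pool(ip: str, start: str = POOL_START, end: str = POOL_END) -> bool:
    """True if ip lies inside the allocation pool (both ends inclusive)."""
    addr = ipaddress.ip_address(ip)
    return ipaddress.ip_address(start) <= addr <= ipaddress.ip_address(end)


# Port addresses taken from the port list in this post.
for ip in ("192.168.100.1", "192.168.100.2", "192.168.100.254"):
    print(ip, "in subnet:", ipaddress.ip_address(ip) in SUBNET, "in pool:", in_pool(ip))
```

All three addresses belong to the subnet, but 192.168.100.254 falls outside the allocation pool.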

Port List

root@node-6

+--------------------------------------+------+-------------------+----------------------------------------------------------------------------------------+

|

+--------------------------------------+------+-------------------+----------------------------------------------------------------------------------------+

| 2d7bdc99-9053-448f-9f4a-e71ba8450ac2 | | fa:16:3e:da:12:25 | {"subnet_id": "7d5eafc1-2c10-410d-a8e0-1dc648969fcd", "ip_address": "192.168.100.254"} |

| 74d4769b-5b7e-4523-ba34-64672d4ac8f1 | | fa:16:3e:90:bd:88 | {"subnet_id": "7d5eafc1-2c10-410d-a8e0-1dc648969fcd", "ip_address": "192.168.100.2"} |

| e7b56cc3-8fcd-4a8b-bd00-79b7a625acdc | | fa:16:3e:d4:88:53 | {"subnet_id": "7d5eafc1-2c10-410d-a8e0-1dc648969fcd", "ip_address": "192.168.100.1"} |

+--------------------------------------+------+-------------------+----------------------------------------------------------------------------------------+
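The port IDs in this list explain the device names seen earlier: each device name is a short prefix followed by the first 11 characters of the port ID. The Python sketch below reproduces the names observed in this post. The function name `device_names` is hypothetical, and the rule is inferred from the output shown here rather than taken from the Neutron source.

```python
# Prefixes observed in this post for a VM port on the compute node.
PREFIXES = ("tap", "qbr", "qvb", "qvo")


def device_names(port_id: str) -> dict:
    """Map each prefix to the device name derived from a Neutron port ID."""
    suffix = port_id[:11]  # e.g. "e7b56cc3-8f"
    return {prefix: prefix + suffix for prefix in PREFIXES}


VM_PORT = "e7b56cc3-8fcd-4a8b-bd00-79b7a625acdc"    # 192.168.100.1, the VM
DHCP_PORT = "74d4769b-5b7e-4523-ba34-64672d4ac8f1"  # 192.168.100.2, the DHCP server

print(device_names(VM_PORT))
print(device_names(DHCP_PORT)["tap"])
```

For the VM port this yields tape7b56cc3-8f, qbre7b56cc3-8f, qvbe7b56cc3-8f and qvoe7b56cc3-8f, all of which appear in the compute node output. For the DHCP port, only the tap device (tap74d4769b-5b, the interface dnsmasq listens on) is seen in this post, so the other three names should be treated as not applicable there.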

Show Port of DHCP Service

root@node-6

+-----------------------+--------------------------------------------------------------------------------------+

| Field | Value |

+-----------------------+--------------------------------------------------------------------------------------+

| admin_state_up | True |

| allowed_address_pairs | |

|

| device_id | dhcp7a15cee0-2af1-5441-b1dc-94897ef4dee9-16b6d042-ce8b-4020-82e0-86a6829a3978 |

| device_owner | network

| extra_dhcp_opts | |

| fixed_ips | {"subnet_id": "7d5eafc1-2c10-410d-a8e0-1dc648969fcd", "ip_address": "192.168.100.2"} |

| id | 74d4769b-5b7e-4523-ba34-64672d4ac8f1 |

| mac_address | fa

|

| network_id | 16b6d042-ce8b-4020-82e0-86a6829a3978 |

| security_groups | |

|

| tenant_id | fc48558ea8684d14a1da30f6c5028064 |

+-----------------------+--------------------------------------------------------------------------------------+

Reference: