Monday, July 9, 2018

[TensorFlow] How to implement LMDBDataset in tf.data API?

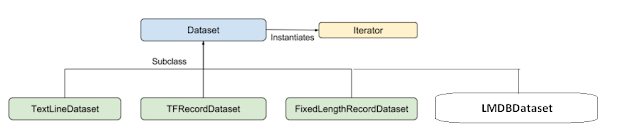

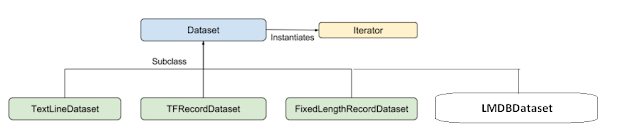

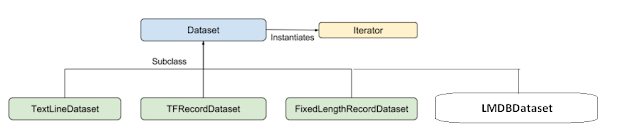

I have finished implementing LMDBDataset for the tf.data API. It may not be bug-free, but it is my first attempt at implementing both the C++ and Python sides of a TensorFlow component. The API architecture looks like this:

Thursday, July 5, 2018

[TensorFlow] How to build your C++ program or application with TensorFlow library using CMake

When you build a C++ program or application against the TensorFlow library, you will probably run into missing header files or linking problems. Here are the steps that I have verified to work.

1. Prepare TensorFlow (v1.10) and its third-party libraries

2. Modify .tf_configure.bazelrc

1. Prepare TensorFlow (v1.10) and its third-party libraries

$ git clone --recursive https://github.com/tensorflow/tensorflow

$ cd tensorflow/contrib/makefile

$ ./build_all_linux.sh

2. Modify .tf_configure.bazelrc

$ cd tensorflow/

$ vim .tf_configure.bazelrc

Append this line to the bottom of the file:

==>

build --define=grpc_no_ares=true

Wednesday, June 27, 2018

[XLA JIT] How to turn on XLA JIT compilation at multiple GPUs training

Before discussing this question, let's recall how to turn on XLA JIT compilation and use it through the TensorFlow Python API.

1. Session

Turning on JIT compilation at the session level will result in all possible operators being greedily compiled into XLA computations. Each XLA computation will be compiled into one or more kernels for the underlying device.
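As a minimal sketch of the session-level switch, assuming the TensorFlow 1.x Python API and a build with XLA support, the JIT level can be set on the session config. The helper name `make_xla_jit_config` is made up for illustration and is not part of TensorFlow.

```python
def make_xla_jit_config():
    """Build a session config with XLA JIT turned on globally (TF 1.x API)."""
    # Imported lazily so the helper can be defined without TensorFlow installed.
    import tensorflow as tf  # assumption: TensorFlow 1.x built with XLA

    config = tf.ConfigProto()
    # ON_1 asks TensorFlow to compile every eligible operator with XLA.
    config.graph_options.optimizer_options.global_jit_level = (
        tf.OptimizerOptions.ON_1)
    return config

# Usage (requires TensorFlow 1.x):
#   with tf.Session(config=make_xla_jit_config()) as sess:
#       sess.run(train_op)  # train_op stands in for your own training op
```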

Monday, June 25, 2018

[PCIe] How to read/write PCIe Switch Configuration Space?

Thursday, June 21, 2018

[TensorFlow] How to get CPU configuration flags (such as SSE4.1, SSE4.2, and AVX...) in a bash script for building TensorFlow from source

AVX, SSE4.2, and similar features are instruction-set extensions offered by Intel CPUs that speed up vector and matrix computations. Have you wondered which CPU configuration flags (such as SSE4.1, SSE4.2, and AVX) you should use on your machine when building TensorFlow from source? If so, here is a quick solution.
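The post itself uses a bash script. The same idea can be sketched in Python, assuming a Linux machine where `/proc/cpuinfo` lists the supported features on a `flags` line. The helper name `copts_from_cpuinfo` and the choice of flags in the mapping are illustrative assumptions, not the script from the post.

```python
# Illustrative mapping from /proc/cpuinfo flag names to GCC -m options.
CPUINFO_TO_GCC = {
    "sse4_1": "-msse4.1",
    "sse4_2": "-msse4.2",
    "avx": "-mavx",
    "avx2": "-mavx2",
    "fma": "-mfma",
}


def copts_from_cpuinfo(cpuinfo_text):
    """Return bazel --copt options for the SIMD flags found in cpuinfo text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags.update(value.split())
            break  # the first core is enough; cores normally report the same flags
    return ["--copt=" + opt
            for name, opt in CPUINFO_TO_GCC.items() if name in flags]


def read_cpuinfo(path="/proc/cpuinfo"):
    """Read the kernel's CPU description (Linux only)."""
    with open(path) as f:
        return f.read()
```

On Linux, `" ".join(copts_from_cpuinfo(read_cpuinfo()))` gives a string that can be appended to a `bazel build` command line.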

[TensorFlow Memory Optimization Experiment] Compare the memory options in Grappler Memory Optimizer

TensorFlow has an optimization module called "Grappler". It provides several kinds of graph optimization, such as Layout, Memory, and ModelPruner. This experiment shows the effect of enabling some of the memory options on a simple CNN model trained on the MNIST dataset.
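A memory option is typically selected through the Grappler rewriter settings in the session config. The sketch below assumes the TensorFlow 1.x API and the `RewriterConfig` enum names as I recall them from the protobuf definition (for example `NO_MEM_OPT`, `HEURISTICS`, `SWAPPING_HEURISTICS`, `RECOMPUTATION_HEURISTICS`). The helper name `make_memory_opt_config` is hypothetical, and the options compared in the experiment may differ.

```python
def make_memory_opt_config(mode_name="HEURISTICS"):
    """Build a session config that selects a Grappler memory optimizer mode."""
    # Imported lazily so the helper can be defined without TensorFlow installed.
    import tensorflow as tf  # assumption: TensorFlow 1.x
    from tensorflow.core.protobuf import rewriter_config_pb2

    # Look up the enum value by name on the RewriterConfig message class.
    mode = getattr(rewriter_config_pb2.RewriterConfig, mode_name)
    rewrite_options = rewriter_config_pb2.RewriterConfig(
        memory_optimization=mode)
    graph_options = tf.GraphOptions(rewrite_options=rewrite_options)
    return tf.ConfigProto(graph_options=graph_options)

# Usage (requires TensorFlow 1.x):
#   sess = tf.Session(config=make_memory_opt_config("SWAPPING_HEURISTICS"))
```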

Thursday, June 14, 2018

[XLA Study] How to use XLA AOT compilation in TensorFlow

This document explains how to use AOT compilation in TensorFlow. We will use tfcompile, a standalone tool that ahead-of-time (AOT) compiles TensorFlow graphs into executable code. It can reduce the total binary size and avoid some runtime overhead. A typical use case of tfcompile is to compile an inference graph into executable code for mobile devices. The steps are as follows:

1. Build tool: tfcompile

> bazel build --config=opt --config=cuda //tensorflow/compiler/aot:tfcompile
