0. Initial setup

# List the remote repositories under our account

# Fetch the latest version from upstream

git fetch upstream

# Merge upstream into the current branch

git merge upstream/master

Troubleshooting

1. Delete the branch first, then push the tag:

git branch -D testtag

or

2. Delete the tag first, then push the branch:

git tag -d testtag


We currently use the Fluent Go client API (fluent-logger-golang) to send data to Fluentd.

It runs in Async mode so that subsequent latency events are not blocked when the Fluentd server has a problem:

// Use "Async" to enable asynchronous I/O (connect and write)
// for sending events to Fluentd without blocking
setting.FluentdLogger.Logger, errF = fluent.New(
	fluent.Config{Async: true, FluentHost: fluenthost, FluentPort: intFluentPort})
if errF != nil {
	fmt.Println("[ERROR]:", errF)
	setting.FluentdLogger.Enabled = false
}

P.S.: When the Fluentd server has a problem, events waiting to be sent are buffered in memory. The BufferLimit option sets the number of events buffered in memory; the default is 8192.

An example of using fluent-logger-golang to send data:

tag := "apm.latency"

var data = map[string]string{

"mytimestamp": strconv.FormatInt(time.Now().Unix(), 10),

"mydata": "hoge",

"myjob": "apm",

"myvalue": "55.55",

}

err := logger.Post(tag, data)

Fluentd

For Fluentd installation, see the official site:

https://docs.fluentd.org/installation/install-by-deb

Fluentd operations

# Start / stop / check status / restart the service

sudo systemctl start td-agent.service

sudo systemctl stop td-agent.service

sudo systemctl status td-agent.service

sudo systemctl restart td-agent.service

# Edit the Fluentd configuration

vi /etc/td-agent/td-agent.conf

# View the Fluentd logs

cat /var/log/td-agent/td-agent.log

InfluxDB 2.0 and later requires a token before a client is permitted to read or write. One way to find the token is the InfluxDB web UI at http://<your ip address>:8086: log in and click "Data".

For example:

Select Go

The token value is then shown:

// You can generate a Token from the "Tokens Tab" in the UI
const token = "Iiq0TiIpL9lXn2GATwh3WeZBkLq-SEul6C0yrKLjq4T4WZ9b0BKVAsFeNs8q0Is93SMbhF0l63s4DwJja4MSbw=="

Fluentd needs this plugin to write data to InfluxDB 2.

What I currently use: the configuration in /etc/td-agent/td-agent.conf

#<match apm.**>

# @type stdout

#</match>

<match apm.**>

@type copy

<store>

@type influxdb2

url https://localhost:8086

token Iiq0TiIpL9lXn2GATwh3WeZBkLq-SEul6C0yrKLjq4TXXXX

use_ssl false

bucket apm

org com

time_precision s

tag_keys ["mytimestamp","mydata"]

field_keys ["myvalue"]

</store>

<store>

@type stdout

</store>

</match>

The result in InfluxDB

Install this plugin:

sudo td-agent-gem install fluent-plugin-influxdb-v2

sudo td-agent-gem uninstall fluent-plugin-influxdb-v2

fluent-plugin-influxdb is a buffered output plugin for Fluentd and InfluxDB.

Configuration Example

<match apm.**>

@type influxdb

host localhost

port 8086

dbname apm

user danny

password xxxxxxxxx

use_ssl false

time_precision s

tag_keys ["timestamp", "data"]

sequence_tag _seq

</match>

Install the plugin:

sudo td-agent-gem install fluent-plugin-influxdb

sudo td-agent-gem uninstall fluent-plugin-influxdb

[warn]: #0 failed to flush the buffer. retry_time=7 next_retry_seconds=2021-04-22 14:28:33 14429497340994114529/137438953472000000000 +0800 chunk="5c089be4bdfb9a28fb4b5ac58228a90 7" error_class=InfluxDB2::InfluxError error="failure writing points to database: partial write: points beyond retention policy dropped=1"

2021-04-22 14:27:22 +0800 [warn]: #0 suppressed same stacktrace

https://stackoverflow.com/questions/54359348/unable-to-insert-data-in-influxdb

var usr = "danny", age = 18;

//ECMAScript 6, ES 6

const LED_PIN = 13; // declares a constant; it cannot be reassigned

//primitive type

//Boolean: true or false

//Number: 3.14

//String: "AAA"

//Null: null

//Undefined: undefined

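// Functions that convert strings to numbers

Number("8.24")       // 8.24

Number("123abc")     // NaN

parseInt("8.24")     // 8

parseInt("123abc")   // 123

parseFloat("8.24")   // 8.24

var num = 0.1 * 0.2;

num.toPrecision(12) // limits the result to 12 significant digits (returns a string)

// Strict equality operator ===

// 8 === "8" => false

// 8 == "8" => true

x = (x === undefined) ? 0 : x;

// Variables declared with var inside a function are local; variables declared outside any function are global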

// Run once every 5 seconds

window.setInterval(function() {

// do something

}, 5000);

//Array

var she = ["AAA", "BBB"];

var she = new Array("AAA","BBB");

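var she = new Array(3); // empty array with three slots (length 3)

var she = [];

she.push("CCC"); // append a new element to the end

she.pop(); // remove and return the last element

she.unshift("DDD"); // insert a new element at the front of the array

she.shift(); // remove and return the first element

she.splice(1,1); // remove one element at index 1

she.splice(1,1, "EEE", "FFF"); // remove one element at index 1 and insert two new elements there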

// for loop

for(var i=0; i<total; i++) {

}

she.forEach( function(val) {

});

//Object

var obj = {name:"Danny", age:18};

delete obj.name; // delete can only remove properties of an object

for( var key in obj){

var val = obj[key];

console.log("attr:" + key + ",value:" + val);

}

###Step 1: Install the CLI###

curl -sL run.linkerd.io/install | sh

export PATH=$PATH:/home/liudanny/.linkerd2/bin

###Step 2: Validate your Kubernetes cluster###

linkerd check --pre

###Step 3: Install the control plane onto your cluster###

linkerd install | kubectl apply -f -

linkerd check

linkerd viz install | kubectl apply -f - # on-cluster metrics stack

###Step 4: Explore Linkerd###

linkerd viz dashboard &

linkerd -n linkerd-viz viz top deploy/web

###Step 5: Install the demo app###

curl -sL https://run.linkerd.io/emojivoto.yml \
  | kubectl apply -f -

# Add Linkerd to emojivoto by running

kubectl get -n emojivoto deploy -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

linkerd -n emojivoto check --proxy

###Step 6: Watch it run###

linkerd -n emojivoto viz stat deploy

linkerd -n emojivoto viz top deploy

linkerd -n emojivoto viz tap deploy/web

###Step 7 (uninstall)###

kubectl get -n emojivoto deploy -o yaml \
  | linkerd uninject - \
  | kubectl apply -f -

curl -sL https://run.linkerd.io/emojivoto.yml \
  | kubectl delete -f -

linkerd viz uninstall | kubectl delete -f -

linkerd uninstall | kubectl delete -f -

###Test your own namespace###

$ kubectl get -n default deploy -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

$ kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

# uninject

$ kubectl get -n default deploy -o yaml \
  | linkerd uninject - \
  | kubectl apply -f -

###This does not work!!!###

$ kubectl get -n default pod -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

# uninject

$ kubectl get -n default pod -o yaml \
  | linkerd uninject - \
  | kubectl apply -f -

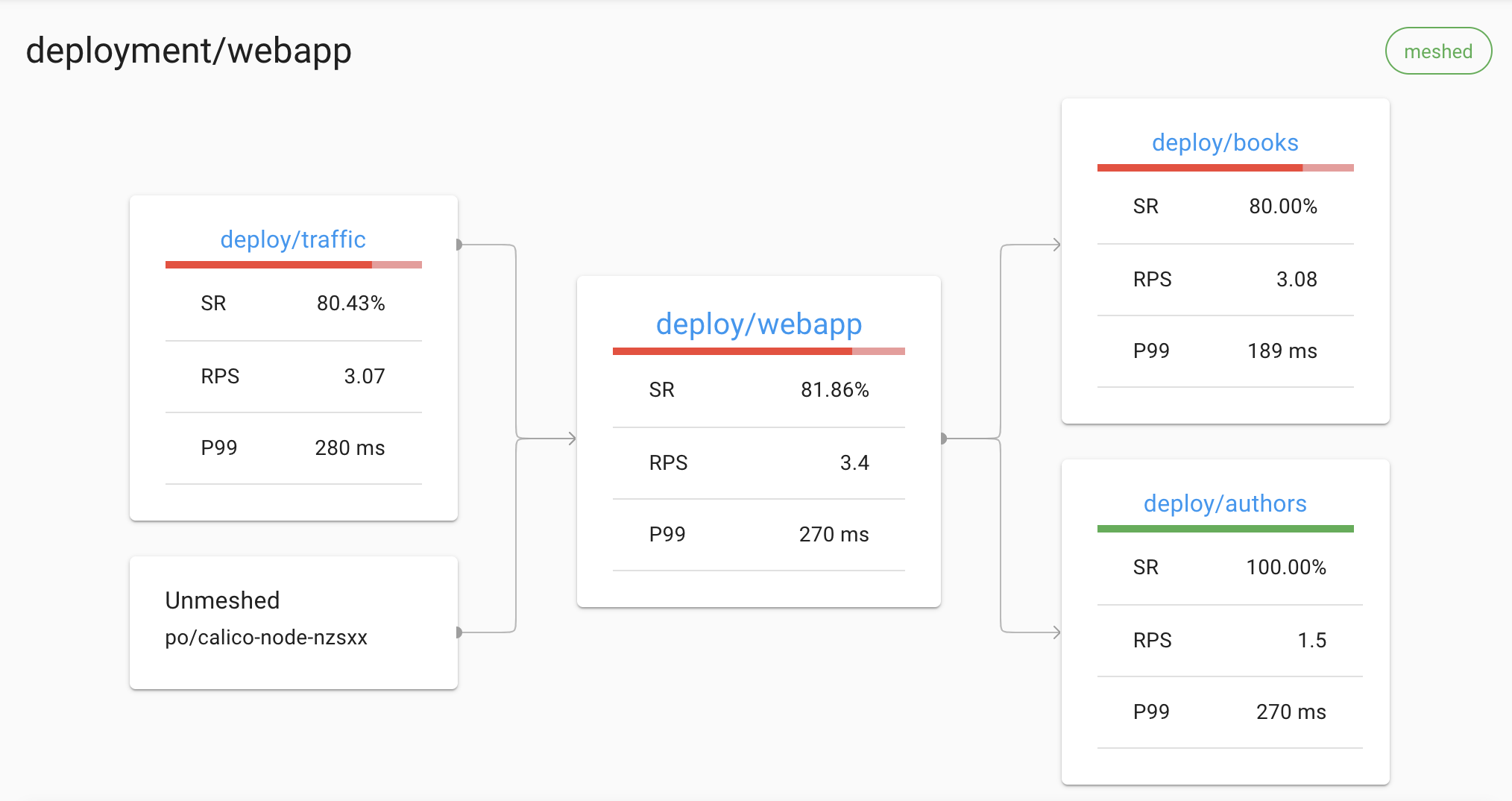

View the Linkerd dashboard and see all the services in the demo app. Since the demo app comes with a load generator, we can see live traffic metrics for HTTP/2 (gRPC) and HTTP/1 (web frontend) by running: linkerd -n emojivoto viz stat deploy

This will show the “golden” metrics for each deployment:

The inject function is very convenient for users.

There is no service-level view of the metrics, but the deployment and pod views largely cover this.

$ k get all -n emojivoto
NAME                            READY   STATUS    RESTARTS   AGE
pod/emoji-696d9d8f95-p2xv5      2/2     Running   0          64m
pod/vote-bot-6d7677bb68-tmbfq   2/2     Running   0          64m
pod/voting-ff4c54b8d-whssp      2/2     Running   0          64m
pod/web-5f86686c4d-l8lcb        2/2     Running   0          64m

NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
service/emoji-svc    ClusterIP   10.106.16.101    <none>        8080/TCP,8801/TCP   105m
service/voting-svc   ClusterIP   10.109.94.225    <none>        8080/TCP,8801/TCP   105m
service/web-svc      ClusterIP   10.100.247.154   <none>        80/TCP              105m

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/emoji      1/1     1            1           105m
deployment.apps/vote-bot   1/1     1            1           105m
deployment.apps/voting     1/1     1            1           105m
deployment.apps/web        1/1     1            1           105m

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/emoji-66ccdb4d86      0         0         0       105m
replicaset.apps/emoji-696d9d8f95      1         1         1       64m
replicaset.apps/vote-bot-69754c864f   0         0         0       105m
replicaset.apps/vote-bot-6d7677bb68   1         1         1       64m
replicaset.apps/voting-f999bd4d7      0         0         0       105m
replicaset.apps/voting-ff4c54b8d      1         1         1       64m
replicaset.apps/web-5f86686c4d        1         1         1       64m
replicaset.apps/web-79469b946f        0         0         0       105m

$ linkerd -n emojivoto viz stat deploy
NAME       MESHED   SUCCESS   RPS      LATENCY_P50   LATENCY_P95   LATENCY_P99   TCP_CONN
emoji      1/1      100.00%   2.3rps   1ms           1ms           1ms           3
vote-bot   1/1      100.00%   0.3rps   1ms           1ms           1ms           1
voting     1/1      87.01%    1.3rps   1ms           1ms           2ms           3
web        1/1      91.91%    2.3rps   2ms           16ms          19ms          3

$ linkerd -n emojivoto viz top deploy

(press q to quit)

(press a/LeftArrowKey to scroll left, d/RightArrowKey to scroll right)

Source Destination Method Path Count Best Worst Last Success Rate

web-5f86686c4d-l8lcb emoji-696d9d8f95-p2xv5 POST /emojivoto.v1.EmojiService/ListAll 42 562µs 5ms 937µs 100.00%

vote-bot-6d7677bb68-tmbfq web-5f86686c4d-l8lcb GET /api/list 42 3ms 13ms 9ms 100.00%

web-5f86686c4d-l8lcb emoji-696d9d8f95-p2xv5 POST /emojivoto.v1.EmojiService/FindByShortcode 42 553µs 13ms 2ms 100.00%

vote-bot-6d7677bb68-tmbfq web-5f86686c4d-l8lcb GET /api/vote 41 5ms 21ms 6ms 87.80%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteDoughnut 6 926µs 3ms 2ms 0.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteMrsClaus 4 1ms 2ms 2ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteRocket 2 2ms 3ms 3ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VotePointUp2 2 2ms 3ms 3ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteCrossedSwords 2 2ms 3ms 3ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteWorldMap 2 739µs 2ms 2ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteDog 2 2ms 2ms 2ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteOkWoman 2 951µs 2ms 2ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteNerdFace 2 2ms 9ms 9ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteConstructionWorkerMan 2 1ms 3ms 3ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteCheckeredFlag 2 863µs 3ms 863µs 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/Vote100 2 894µs 2ms 894µs 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VotePizza 2 1ms 3ms 3ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteJackOLantern 2 884µs 3ms 3ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteManDancing 2 761µs 1ms 1ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteBeachUmbrella 2 1ms 2ms 1ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteNoGoodWoman 2 1ms 2ms 2ms 100.00%

web-5f86686c4d-l8lcb voting-ff4c54b8d-whssp POST /emojivoto.v1.VotingService/VoteGuardsman 2 968µs 2ms 2ms 100.00%

$ linkerd -n emojivoto viz tap deploy/web

req id=11:0 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/list

req id=11:1 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/ListAll

rsp id=11:1 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1792µs

end id=11:1 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=193µs response-length=2140B

rsp id=11:0 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=4294µs

end id=11:0 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=358µs response-length=4513B

req id=11:2 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/vote

req id=11:3 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/FindByShortcode

rsp id=11:3 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1657µs

end id=11:3 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=175µs response-length=25B

req id=11:4 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :method=POST :authority=voting-svc.emojivoto:8080 :path=/emojivoto.v1.VotingService/VoteDoughnut

rsp id=11:4 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :status=200 latency=2217µs

end id=11:4 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true grpc-status=Unknown duration=161µs response-length=0B

rsp id=11:2 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=500 latency=8272µs

end id=11:2 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=171µs response-length=51B

req id=11:5 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/list

req id=11:6 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/ListAll

rsp id=11:6 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1483µs

end id=11:6 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=145µs response-length=2140B

rsp id=11:5 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=5621µs

end id=11:5 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=336µs response-length=4513B

req id=11:7 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/vote

req id=11:8 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/FindByShortcode

rsp id=11:8 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1477µs

end id=11:8 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=206µs response-length=28B

req id=11:9 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :method=POST :authority=voting-svc.emojivoto:8080 :path=/emojivoto.v1.VotingService/VoteManDancing

rsp id=11:9 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :status=200 latency=1560µs

end id=11:9 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true grpc-status=OK duration=144µs response-length=5B

rsp id=11:7 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=7140µs

end id=11:7 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=96µs response-length=0B

req id=11:10 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/list

req id=11:11 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/ListAll

rsp id=11:11 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1855µs

end id=11:11 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=375µs response-length=2140B

rsp id=11:10 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=3786µs

end id=11:10 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=339µs response-length=4513B

req id=11:12 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/vote

Linkerd can proxy all TCP connections, and will automatically enable advanced features (including metrics, load balancing, retries, and more) for HTTP, HTTP/2, and gRPC connections.

Linkerd is capable of proxying all TCP traffic, including TLS connections, WebSockets, and HTTP tunneling.

In most cases, Linkerd can do this without configuration. To do this, Linkerd performs protocol detection to determine whether traffic is HTTP or HTTP/2 (including gRPC). If Linkerd detects that a connection is HTTP or HTTP/2, Linkerd will automatically provide HTTP-level metrics and routing.

If Linkerd cannot determine that a connection is using HTTP or HTTP/2, **Linkerd will proxy the connection as a plain TCP connection, applying mTLS and providing byte-level metrics** as usual.

Note: Client-initiated HTTPS will be treated as TCP, not as HTTP, as Linkerd will not be able to observe the HTTP transactions on the connection.

(At ITRI, we currently get TCP information from the Layer 4 network stack in the kernel.)
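The detection idea described above can be illustrated with a small Go sketch. This is not Linkerd's implementation; it only shows the general technique of classifying a connection from the first bytes the client sends. The function name `detectProtocol` and the method list are assumptions made for this example.

```go
package main

import (
	"bytes"
	"fmt"
)

// http2Preface is the fixed client connection preface defined by RFC 7540.
var http2Preface = []byte("PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n")

// http1Methods lists request-line prefixes that identify HTTP/1.x traffic
// (an illustrative subset, not an exhaustive list).
var http1Methods = [][]byte{
	[]byte("GET "), []byte("POST "), []byte("PUT "), []byte("DELETE "),
	[]byte("HEAD "), []byte("OPTIONS "), []byte("PATCH "),
}

// detectProtocol classifies a connection from the first bytes the client sent.
// Anything that is not recognizably HTTP falls back to "tcp".
func detectProtocol(firstBytes []byte) string {
	if bytes.HasPrefix(firstBytes, http2Preface) {
		return "http2"
	}
	for _, m := range http1Methods {
		if bytes.HasPrefix(firstBytes, m) {
			return "http1"
		}
	}
	return "tcp"
}

func main() {
	fmt.Println(detectProtocol([]byte("GET /api/list HTTP/1.1\r\n")))
	fmt.Println(detectProtocol(http2Preface))
	// The first bytes of a TLS ClientHello are opaque to the sniffer,
	// which is why client-initiated HTTPS ends up classified as TCP.
	fmt.Println(detectProtocol([]byte{0x16, 0x03, 0x01}))
}
```

A sniffer like this needs the client to send data first, which is why server-speaks-first protocols cause trouble for protocol detection.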

In some cases, Linkerd’s protocol detection cannot function because it is not provided with enough client data. This can result in a 10-second delay in creating the connection as the protocol detection code waits for more data. This situation is often encountered when using “server-speaks-first” protocols, or protocols where the server sends data before the client does, and can be avoided by supplying Linkerd with some additional configuration.

There are two basic mechanisms for configuring protocol detection: opaque ports and skip ports. Marking a port as opaque instructs Linkerd to proxy the connection as a TCP stream and not to attempt protocol detection. Marking a port as skip bypasses the proxy entirely.

By default, Linkerd automatically marks some ports as opaque, including the default ports for SMTP, MySQL, PostgreSQL, and Memcached. Services that speak those protocols, use the default ports, and are inside the cluster do not need further configuration.

The following table summarizes some common server-speaks-first protocols and the configuration necessary to handle them. The “on-cluster config” column refers to the configuration when the destination is on the same cluster; the “off-cluster config” to when the destination is external to the cluster.

some common server-speaks-first protocols

Automatic retries are one of the most powerful and useful mechanisms a service mesh has for gracefully handling partial or transient application failures.

Timeouts work hand in hand with retries. Once requests are retried a certain number of times, it becomes important to limit the total amount of time a client waits before giving up entirely. Imagine a number of retries forcing a client to wait for 10 seconds.

By default, Linkerd automatically enables mutual Transport Layer Security (mTLS) for most TCP traffic between meshed pods, by establishing and authenticating secure, private TLS connections between Linkerd proxies. This means that Linkerd can add authenticated, encrypted communication to your application with very little work on your part.

One of Linkerd’s most powerful features is its extensive set of tooling around observability: the measuring and reporting of observed behavior in meshed applications.

To gain access to Linkerd’s observability features you only need to install the Viz extension:

linkerd viz install | kubectl apply -f -

Linkerd’s telemetry and monitoring features function automatically, without requiring any work on the part of the developer. These features include:

This data can be consumed in several ways:

linkerd viz stat and linkerd viz routes.

Success Rate

This is the percentage of successful requests during a time window (1 minute by default).

In the output of the command linkerd viz routes -o wide, this metric is split into EFFECTIVE_SUCCESS and ACTUAL_SUCCESS. For routes configured with retries, the former calculates the percentage of success after retries (as perceived by the client-side), and the latter before retries (which can expose potential problems with the service).

Traffic (Requests Per Second)

This gives an overview of how much demand is placed on the service/route. As with success rates, linkerd viz routes -o wide splits this metric into EFFECTIVE_RPS and ACTUAL_RPS, corresponding to rates after and before retries respectively.

Latencies (At ITRI, latency is defined as the client → server → client round-trip time. We also measure the service's response time.)

Times taken to service requests per service/route are split into 50th, 95th and 99th percentiles. Lower percentiles give you an overview of the average performance of the system, while tail percentiles help catch outlier behavior.

For HTTP, HTTP/2, and gRPC connections, Linkerd automatically load balances requests across all destination endpoints without any configuration required. (For TCP connections, Linkerd will balance connections.)

Linkerd uses an algorithm called EWMA, or exponentially weighted moving average, to automatically send requests to the fastest endpoints. This load balancing can improve end-to-end latencies.
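The EWMA idea can be sketched in a few lines of Go. This is a simplified illustration under stated assumptions, not Linkerd's balancer, which is more sophisticated. The `endpoint` type, the smoothing factor `alpha`, and the pod names are hypothetical.

```go
package main

import "fmt"

// endpoint tracks a smoothed latency estimate for one backend.
type endpoint struct {
	name string
	ewma float64 // smoothed latency in milliseconds
}

// observe folds a new latency sample into the moving average.
// alpha (between 0 and 1) is an assumed smoothing factor: a higher value
// makes the average react faster to recent samples.
func (e *endpoint) observe(latencyMs, alpha float64) {
	e.ewma = alpha*latencyMs + (1-alpha)*e.ewma
}

// pickFastest returns the endpoint with the lowest smoothed latency.
// It expects a non-empty slice.
func pickFastest(endpoints []*endpoint) *endpoint {
	best := endpoints[0]
	for _, e := range endpoints[1:] {
		if e.ewma < best.ewma {
			best = e
		}
	}
	return best
}

func main() {
	a := &endpoint{name: "pod-a", ewma: 2}
	b := &endpoint{name: "pod-b", ewma: 2}
	// pod-a becomes slow; its average drifts upward with each sample.
	for i := 0; i < 3; i++ {
		a.observe(20, 0.5)
		b.observe(2, 0.5)
	}
	fmt.Println(pickFastest([]*endpoint{a, b}).name)
}
```

Because recent samples carry more weight, a backend that slows down loses traffic quickly and regains it once its latency recovers.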

Service discovery

For destinations that are not in Kubernetes, Linkerd will balance across endpoints provided by DNS.

For destinations that are in Kubernetes, Linkerd will look up the IP address in the Kubernetes API. If the IP address corresponds to a Service, Linkerd will load balance across the endpoints of that Service and apply any policy from that Service’s Service Profile. On the other hand, if the IP address corresponds to a Pod, Linkerd will not perform any load balancing or apply any Service Profiles.

Load balancing gRPC

Linkerd’s load balancing is particularly useful for gRPC (or HTTP/2) services in Kubernetes, for which Kubernetes’s default load balancing is not effective.

Linkerd automatically adds the data plane proxy to pods when the linkerd.io/inject: enabled annotation is present on a namespace or any workloads, such as deployments or pods. This is known as “proxy injection”. (There are many more details in Adding Your Services to Linkerd.)

Details

Proxy injection is implemented as a Kubernetes admission webhook. This means that the proxies are added to pods within the Kubernetes cluster itself, regardless of whether the pods are created by kubectl, a CI/CD system, or any other system.

For each pod, two containers are injected:

linkerd-init, a Kubernetes Init Container that configures iptables to automatically forward all incoming and outgoing TCP traffic through the proxy. (Note that this container is not present if the Linkerd CNI Plugin has been enabled.)

linkerd-proxy, the Linkerd data plane proxy itself.

Note that simply adding the annotation to a resource with pre-existing pods will not automatically inject those pods. You will need to update the pods (e.g. with kubectl rollout restart etc.) for them to be injected. This is because Kubernetes does not call the webhook until it needs to update the underlying resources.

Linkerd installs can be configured to run a CNI plugin that rewrites each pod’s iptables rules automatically. Rewriting iptables is required for routing network traffic through the pod’s linkerd-proxy container. When the CNI plugin is enabled, individual pods no longer need to include an init container that requires the NET_ADMIN capability to perform rewriting. This can be useful in clusters where that capability is restricted by cluster administrators.

(At ITRI, we don't require code changes. We provide the downstream of related trajectories.)

Linkerd can be configured to emit trace spans from the proxies, allowing you to see exactly what time requests and responses spend inside.

Unlike most of the features of Linkerd, distributed tracing requires both code changes and configuration. (You can read up on Distributed tracing in the service mesh: four myths for why this is.)

Furthermore, Linkerd provides many of the features that are often associated with distributed tracing, without requiring configuration or application changes, including:

For example, Linkerd can display a live topology of all incoming and outgoing dependencies for a service, without requiring distributed tracing or any other such application modification:

The Linkerd dashboard showing an automatically generated topology graph

The main content is from here:

https://github.com/intel/sriov-network-device-plugin