Wednesday, January 20, 2016

[Git] Meld - The difftool

Set up Meld as git's default difftool.
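Here is a minimal Python sketch that makes Meld git's default diff tool through `git config`. It assumes Meld is installed and on the PATH. `diff.tool` and `difftool.prompt` are standard git config keys. The helper names are my own.

```python
import subprocess
from typing import List


def meld_difftool_commands(scope: str = "--global") -> List[List[str]]:
    """Return the git config commands that make Meld the default difftool."""
    return [
        ["git", "config", scope, "diff.tool", "meld"],
        # Do not ask for confirmation before opening each file in Meld.
        ["git", "config", scope, "difftool.prompt", "false"],
    ]


def apply(commands: List[List[str]]) -> None:
    """Run each command, raising CalledProcessError if git fails."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

After `apply(meld_difftool_commands())`, running `git difftool HEAD~1` should open each changed file in Meld.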

Tuesday, January 19, 2016

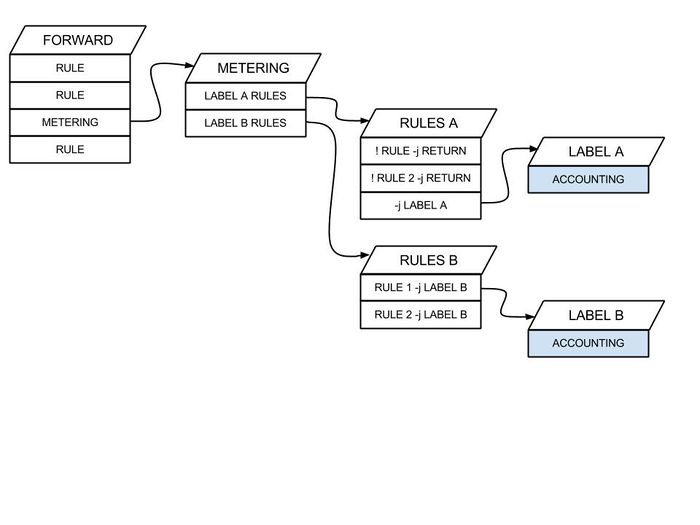

[Ceilometer] To collect the bandwidth of Neutron L3 router

From this

We can use the neutron commands to list the related metering labels and rules:

$ neutron meter-label-list

$ neutron meter-label-rule-list

The "neutron-meter-agent" will collect the traffic accounting in the


So we need to look for the chains of the metering labels "neutron-meter-l-78675a84" and "neutron-meter-l-b88c5977" in the router namespace:

root@cn3:~# ip netns exec qrouter-b1741371-ee12-46a1-831b-d3b35429d7c8 iptables -nL -v -x

root@cn3:~# ip netns exec qrouter-b1741371-ee12-46a1-831b-d3b35429d7c8 iptables -t nat -S
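The label chains only count traffic. To turn the `iptables -nL -v -x` listing into per-label totals, here is a small Python sketch that sums the packet and byte counters of every rule in each chain. It assumes the `-x` flag is used, so counters are exact integers. As far as I know, the iptables-based metering driver reads the label chain counters in a similar way, but treat that as an assumption. The function names are hypothetical.

```python
from typing import Dict, Tuple


def parse_chain_counters(output: str) -> Dict[str, Tuple[int, int]]:
    """Sum (packets, bytes) over the rules of every chain in
    `iptables -nL -v -x` output. Without -x, counters are abbreviated
    (e.g. "98K"); such rules fail int() and are skipped."""
    totals: Dict[str, list] = {}
    chain = None
    for line in output.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "Chain":
            # e.g. "Chain neutron-meter-l-78675a84 (1 references)"
            chain = fields[1]
            totals.setdefault(chain, [0, 0])
            continue
        if chain is None or fields[0] == "pkts":
            continue  # column header line
        try:
            pkts, nbytes = int(fields[0]), int(fields[1])
        except (ValueError, IndexError):
            continue  # not a rule line
        totals[chain][0] += pkts
        totals[chain][1] += nbytes
    return {name: (p, b) for name, (p, b) in totals.items()}


def label_counters(output: str,
                   prefix: str = "neutron-meter-l-") -> Dict[str, Tuple[int, int]]:
    """Keep only the metering label chains."""
    return {name: value for name, value in parse_chain_counters(output).items()
            if name.startswith(prefix)}
```

You can feed it the output of the `ip netns exec qrouter-... iptables -nL -v -x` command above, for example via `subprocess.run(..., capture_output=True, text=True).stdout`.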

Here is an example of querying the metering data in Ceilometer:

root@node-5:~# ceilometer statistics -m bandwidth -q "resource=b88c5977-4445-4f19-9c8f-3d92809f844e;timestamp>=2016-03-01T00:00:00" --period 86400
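With `--period 86400`, each row of the result covers one day. Here is a rough Python sketch that builds the same `-q` filter string and converts one period's total into an average rate. I am assuming that the bandwidth meter is in bytes and that the Sum column is the total bytes for the period. Both function names are hypothetical.

```python
from datetime import datetime


def bandwidth_query(resource_id: str, start: datetime) -> str:
    """Build the -q filter string used in the ceilometer statistics command."""
    return "resource={};timestamp>={}".format(
        resource_id, start.strftime("%Y-%m-%dT%H:%M:%S"))


def average_bits_per_second(total_bytes: float, period_seconds: int) -> float:
    """Average throughput over one statistics period, assuming total_bytes
    is that period's Sum for the bandwidth meter (in bytes)."""
    if period_seconds <= 0:
        raise ValueError("period_seconds must be positive")
    return total_bytes * 8 / period_seconds
```

For example, a daily Sum of 10,800,000 bytes works out to 10,800,000 × 8 / 86,400 = 1,000 bit/s on average.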

P.S:

The following article introduces "Traffic Accounting with Linux IPTables":

http://www.catonmat.net/blog/traffic-accounting-with-iptables/

https://wiki.openstack.org/wiki/Neutron/Metering/Bandwidth

Friday, January 15, 2016

[Apache2] To increase the concurrent request number of apache server

This post is just a memo for a small task of mine. Our web app is a Django app in

I recently tested several of our RESTful APIs and found that the maximum number of concurrent requests (around 350) was not good enough, so I tried to tune it to an acceptable level, e.g. more than 500.

I also found that when the number of concurrent requests exceeded 350, the Apache benchmark tool (ab) often reported the error "apr_socket_recv: Connection reset by peer (104)", which means the web server dropped the connection in the middle of the session.

So two things came to mind: Apache2 configuration and Linux tuning.

For Apache2:

I followed this document to change mpm_worker.conf.

The default values:

ServerLimit

StartServers 2

MaxClients 150

MinSpareThreads

MaxSpareThreads

ThreadsPerChild

What I changed in my mpm_worker.conf:

vi . conf

<IfModule mpm_worker_module>

ServerLimit

StartServers 4

MaxClients 1000

MinSpareThreads

MaxSpareThreads

ThreadLimit

ThreadsPerChild

MaxRequestWorkers

MaxConnectionsPerChild

</IfModule>
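Raising MaxClients (MaxRequestWorkers) alone may not be enough, because the worker MPM limits it based on ServerLimit and ThreadsPerChild. The Python sketch below is my reading of those clamping rules, written to show the arithmetic. It is not Apache's code, so check the MPM documentation before relying on it. The function name is hypothetical.

```python
from typing import List, Tuple


def effective_max_workers(server_limit: int, thread_limit: int,
                          threads_per_child: int,
                          max_request_workers: int) -> Tuple[int, List[str]]:
    """Approximate how the worker MPM adjusts MaxRequestWorkers (MaxClients).
    Returns the effective value plus notes on each adjustment made."""
    notes = []
    if threads_per_child > thread_limit:
        notes.append("ThreadsPerChild lowered to ThreadLimit")
        threads_per_child = thread_limit
    if max_request_workers < threads_per_child:
        notes.append("MaxRequestWorkers raised to ThreadsPerChild")
        max_request_workers = threads_per_child
    if max_request_workers % threads_per_child:
        notes.append("MaxRequestWorkers rounded down to a multiple of ThreadsPerChild")
        max_request_workers -= max_request_workers % threads_per_child
    ceiling = server_limit * threads_per_child
    if max_request_workers > ceiling:
        notes.append("MaxRequestWorkers capped at ServerLimit * ThreadsPerChild")
        max_request_workers = ceiling
    return max_request_workers, notes
```

Take the illustrative values ServerLimit 16 and ThreadsPerChild 25 (not the ones I used). With those, MaxClients 1000 would be capped at 16 × 25 = 400. If my reading is right, ServerLimit has to be raised along with MaxClients.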

For Linux tuning:

I followed this document to increase some kernel (sysctl) parameters:


sysctl net.core.netdev_max_backlog=2000

sysctl net.ipv4.tcp_max_syn_backlog=2048
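Each sysctl key corresponds to a file under /proc/sys, with the dots turned into path separators. Here is a small Python sketch for checking the current values before and after tuning. Values set with sysctl on the command line do not survive a reboot unless they are also added to /etc/sysctl.conf. The helper names are hypothetical.

```python
import os
from typing import Optional


def sysctl_path(key: str) -> str:
    """Map a key such as net.core.netdev_max_backlog to its /proc/sys file."""
    return os.path.join("/proc/sys", *key.split("."))


def read_sysctl(key: str) -> Optional[str]:
    """Return the current value, or None if the key does not exist
    (for example on a non-Linux machine)."""
    try:
        with open(sysctl_path(key)) as f:
            return f.read().strip()
    except FileNotFoundError:
        return None
```

For example, `read_sysctl("net.ipv4.tcp_max_syn_backlog")` should return "2048" after the command above.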

Tuesday, December 22, 2015

[Django] The question of changing urlpattern dynamically

A couple of days ago my colleague asked me how to change urlpatterns dynamically in Django. I spent some time surveying ways to do it, even though we eventually found an alternative that achieves the same result. Here is the solution I found:

http://stackoverflow.com/questions/8771070/unable-dynamic-changing-urlpattern-when-changing-database

I prefer to solve this problem with a middleware class, as suggested in this answer:

An alternative method would be to either create a super pattern that calls a view, which in turn makes a DB call. Another approach is to handle this in a middleware class where you test for a 404 error, check if the pattern is likely to be one of your categories, and then do the DB lookup there. I have done this in the past and it's not as bad as it sounds. Look at the django/contrib/flatpages app.

For how to write a middleware class, here is another reference:

http://stackoverflow.com/questions/753909/django-middleware-urls
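Here is a minimal sketch of the "catch the 404 in a middleware" approach. It follows the shape of a Django new-style middleware (Django 1.10+): it is constructed with `get_response` and then called with each request. Older projects would use `process_response` instead. `CategoryFallbackMiddleware`, `category_exists()` and `category_view()` are hypothetical names. In a real project, `category_exists()` would query the database and `category_view()` would return an HttpResponse. As far as I know, django.contrib.flatpages' fallback middleware works on the same idea.

```python
class CategoryFallbackMiddleware:
    """Let the normal urlpatterns run first; only on a 404, check whether
    the path names a category and, if so, serve it."""

    def __init__(self, get_response):
        self.get_response = get_response

    def __call__(self, request):
        response = self.get_response(request)
        if response.status_code != 404:
            return response  # a regular urlpattern handled it
        # Treat the first path segment as a candidate category slug.
        slug = request.path.strip("/").split("/")[0]
        if slug and self.category_exists(slug):
            return self.category_view(request, slug)
        return response  # still a genuine 404

    def category_exists(self, slug):
        # Hypothetical hook, e.g. Category.objects.filter(slug=slug).exists()
        raise NotImplementedError

    def category_view(self, request, slug):
        # Hypothetical hook: render and return the category page.
        raise NotImplementedError
```

You would subclass it, implement the two hooks, and add its dotted path to the MIDDLEWARE setting. The urlpatterns themselves never need to change when categories are added to the database.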


Friday, December 18, 2015

[IOMMU] The error of "VFIO group is not viable"

As mentioned in my previous article, DPDK is a library that accelerates packet transmission between user-space applications and physical network devices. Recently there was an article "Using Open vSwitch similar

But I encountered openvswitch with

http://vfio.blogspot.tw/2014/08/vfiovga-faq.html

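Question 1:

I get the following error when attempting to start the guest:

vfio: error, group $GROUP is not viable, please ensure all devices within the iommu_group are bound to their vfio bus driver.

Answer: There are more devices in the IOMMU group than you are assigning; they all need to be bound to the vfio bus driver (vfio-pci) or pci-stub for vfio to work.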

So there are two solutions:

1. Install the device into a different slot

2. Bypass ACS using the ACS override patch

For a more detailed explanation, see this article:

http://vfio.blogspot.tw/2014/08/iommu-groups-inside-and-out.html
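To find which devices make a group non-viable, you can walk /sys/kernel/iommu_groups/<N>/devices/ and check each device's `driver` link. Here is a Python sketch of that check. It is simplified: it treats vfio-pci, pci-stub, or no driver as acceptable, and the kernel's actual rules may differ, for example for PCI bridges. The device address must be in full sysfs form, e.g. 0000:08:00.2 for the card bound in the script below. The function names are hypothetical.

```python
import os
from typing import Dict, List, Optional

SYSFS_GROUPS = "/sys/kernel/iommu_groups"
# Drivers assumed acceptable in a viable group (simplified).
ACCEPTED = {"vfio-pci", "pci-stub", None}


def iommu_groups(root: str = SYSFS_GROUPS) -> Dict[str, List[str]]:
    """Map each IOMMU group number to the PCI addresses in it."""
    return {
        group: sorted(os.listdir(os.path.join(root, group, "devices")))
        for group in sorted(os.listdir(root), key=int)
    }


def bound_driver(root: str, group: str, device: str) -> Optional[str]:
    """Name of the driver bound to a device, or None if it is unbound."""
    link = os.path.join(root, group, "devices", device, "driver")
    if not os.path.islink(link):
        return None
    return os.path.basename(os.readlink(link))


def blocking_devices(device: str, root: str = SYSFS_GROUPS) -> List[str]:
    """Devices sharing `device`'s IOMMU group that are bound to some other
    driver, i.e. the likely cause of "group ... is not viable"."""
    for group, devices in iommu_groups(root).items():
        if device in devices:
            return [d for d in devices
                    if bound_driver(root, group, d) not in ACCEPTED]
    raise ValueError("device {} not found in any IOMMU group".format(device))
```

If `blocking_devices(...)` returns other functions of the same card, you can either bind them to vfio-pci too or apply one of the two solutions above.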

The following script summarizes the steps from the article:


# Update GRUB: put these options in GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub, then run update-grub
GRUB_CMDLINE_LINUX_DEFAULT="... default_hugepagesz=1G hugepagesz=1G hugepages=16 hugepagesz=2M hugepages=2048 iommu=pt intel_iommu=on isolcpus=1-13,15-27"
update-grub

# Export variables
export OVS_DIR=/root/soucecode/ovs
export DPDK_DIR=/root/soucecode/dpdk-2.1.0
export LC_ALL=en_US.UTF-8
export LANG=en_US.UTF-8
locale-gen "en_US.UTF-8"
dpkg-reconfigure locales

# Build DPDK (download into /root/soucecode so the tarball unpacks to $DPDK_DIR)
mkdir -p /root/soucecode
cd /root/soucecode
curl -O http://dpdk.org/browse/dpdk/snapshot/dpdk-2.1.0.tar.gz
tar -xvzf dpdk-2.1.0.tar.gz
cd $DPDK_DIR
sed 's/CONFIG_RTE_BUILD_COMBINE_LIBS=n/CONFIG_RTE_BUILD_COMBINE_LIBS=y/' -i config/common_linuxapp
make install T=x86_64-ivshmem-linuxapp-gcc
cd $DPDK_DIR/x86_64-ivshmem-linuxapp-gcc
EXTRA_CFLAGS="-g -Ofast" make -j10

# Build OVS (clone into /root/soucecode so it ends up in $OVS_DIR)
cd /root/soucecode
git clone https://github.com/openvswitch/ovs.git
cd $OVS_DIR
./boot.sh
./configure --with-dpdk="$DPDK_DIR/x86_64-ivshmem-linuxapp-gcc/" CFLAGS="-g -Ofast"
make 'CFLAGS=-g -Ofast -march=native' -j10

# Set up OVS
pkill -9 ovs
rm -rf /usr/local/var/run/openvswitch
rm -rf /usr/local/etc/openvswitch/
rm -f /usr/local/etc/openvswitch/conf.db
mkdir -p /usr/local/etc/openvswitch
mkdir -p /usr/local/var/run/openvswitch
cd $OVS_DIR
./ovsdb/ovsdb-tool create /usr/local/etc/openvswitch/conf.db ./vswitchd/vswitch.ovsschema
./ovsdb/ovsdb-server --remote=punix:/usr/local/var/run/openvswitch/db.sock --remote=db:Open_vSwitch,Open_vSwitch,manager_options --pidfile --detach
./utilities/ovs-vsctl --no-wait init

# Check IOMMU & hugepage info
cat /proc/cmdline
grep Huge /proc/meminfo

# Set up hugepages
mkdir -p /mnt/huge
mkdir -p /mnt/huge_2mb
mount -t hugetlbfs hugetlbfs /mnt/huge
mount -t hugetlbfs none /mnt/huge_2mb -o pagesize=2MB

# Bind the Ethernet card to vfio-pci
modprobe vfio-pci
cd $DPDK_DIR/tools
./dpdk_nic_bind.py --status
./dpdk_nic_bind.py --bind=vfio-pci 08:00.2

# Start OVS
modprobe openvswitch
$OVS_DIR/vswitchd/ovs-vswitchd --dpdk -c 0x2 -n 4 --socket-mem 2048 -- unix:/usr/local/var/run/openvswitch/db.sock --pidfile --detach

# Set up DPDK vhost-user ports
$OVS_DIR/utilities/ovs-vsctl show
$OVS_DIR/utilities/ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev
$OVS_DIR/utilities/ovs-vsctl add-port br0 dpdk0 -- set Interface dpdk0 type=dpdk
$OVS_DIR/utilities/ovs-vsctl add-port br0 vhost-user1 -- set Interface vhost-user1 type=dpdkvhostuser
$OVS_DIR/utilities/ovs-vsctl add-port br0 vhost-user2 -- set Interface vhost-user2 type=dpdkvhostuser
find /sys/kernel/iommu_groups/ -type l

# Create VMs (with -nographic each qemu stays in the foreground, so start each one in its own terminal)
qemu-system-x86_64 -m 1024 -smp 4 -cpu host -hda /tmp/vm1.qcow2 -boot c -enable-kvm -no-reboot -nographic -net none \
  -chardev socket,id=char1,path=/usr/local/var/run/openvswitch/vhost-user1 \
  -netdev type=vhost-user,id=mynet1,chardev=char1,vhostforce \
  -device virtio-net-pci,mac=00:00:00:00:dd:01,netdev=mynet1 \
  -object memory-backend-file,id=mem,size=1024M,mem-path=/dev/hugepages,share=on \
  -numa node,memdev=mem -mem-prealloc
qemu-system-x86_64 -m 1024 -smp 4 -cpu host -hda /tmp/vm2.qcow2 -boot c -enable-kvm -no-reboot -nographic -net none \
  -chardev socket,id=char1,path=/usr/local/var/run/openvswitch/vhost-user2 \
  -netdev type=vhost-user,id=mynet1,chardev=char1,vhostforce \
  -device virtio-net-pci,mac=00:00:00:00:dd:02,netdev=mynet1 \
  -object memory-backend-file,id=mem,size=1024M,mem-path=/dev/hugepages,share=on \
  -numa node,memdev=mem -mem-prealloc
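The GRUB options reserve memory up front: 16 pages of 1 GiB plus 2048 pages of 2 MiB. That is 16 GiB + 4 GiB = 20 GiB, which the host must be able to spare. Here is a small Python sketch that computes this from a kernel command line such as the output of `cat /proc/cmdline`. It is simplified: each `hugepages=` is attributed to the `hugepagesz=` before it, or to `default_hugepagesz`. The function names are hypothetical.

```python
import re
from typing import Dict

_SIZE = re.compile(r"^(\d+)([KMG])B?$", re.IGNORECASE)
_UNITS = {"K": 2 ** 10, "M": 2 ** 20, "G": 2 ** 30}


def _to_bytes(size: str) -> int:
    """Convert a page size such as "1G" or "2M" to bytes."""
    m = _SIZE.match(size)
    if not m:
        raise ValueError("unrecognised page size: " + size)
    return int(m.group(1)) * _UNITS[m.group(2).upper()]


def hugepage_reservations(cmdline: str) -> Dict[int, int]:
    """Map page size in bytes -> page count from a kernel command line."""
    default = None
    current = None
    counts: Dict[int, int] = {}
    for token in cmdline.split():
        key, _, value = token.partition("=")
        if key == "default_hugepagesz":
            default = _to_bytes(value)
        elif key == "hugepagesz":
            current = _to_bytes(value)
        elif key == "hugepages":
            size = current if current is not None else default
            if size is not None:  # skip counts whose page size is unknown here
                counts[size] = int(value)
    return counts
```

`sum(size * n for size, n in hugepage_reservations(cmdline).items())` then gives the total reserved bytes. The `--socket-mem 2048` for ovs-vswitchd and the 1024M per VM are drawn from this pool.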

Tuesday, December 1, 2015

[Django] How to upload a file via Django REST Framework?

Here is an example of using MultiPartParser to parse the multipart/form-data media type. For more details, see: http://www.django-rest-framework.org/api-guide/parsers

Client Side via curl:( Basically you

Command format

curl : : : ip }

for

curl : . yaml u danny :
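curl's -F option sends the file as multipart/form-data, which is what MultiPartParser expects. To show roughly what goes over the wire, here is a minimal Python sketch that builds such a body for one file field. The field name 'data' matches the P.S. below. The function name and the default content type are my own choices, not part of DRF or curl.

```python
import uuid
from typing import Tuple


def build_multipart(field: str, filename: str, content: bytes,
                    content_type: str = "application/octet-stream") -> Tuple[bytes, str]:
    """Encode one file as multipart/form-data, similar in spirit to
    `curl -F field=@file`. Returns (body, Content-Type header value)."""
    boundary = uuid.uuid4().hex
    head = (
        "--{b}\r\n"
        'Content-Disposition: form-data; name="{f}"; filename="{n}"\r\n'
        "Content-Type: {t}\r\n\r\n"
    ).format(b=boundary, f=field, n=filename, t=content_type).encode()
    tail = "\r\n--{}--\r\n".format(boundary).encode()
    return head + content + tail, "multipart/form-data; boundary=" + boundary
```

You could POST the result with `urllib.request.Request(url, data=body, headers={"Content-Type": ctype}, method="POST")`, where `url` is your own API endpoint. On the server, the part should then show up in `request.FILES['data']`.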

Server side:

In settings.py:

REST_FRAMEWORK = {
    'DEFAULT_PARSER_CLASSES': (
        'rest_framework.parsers.MultiPartParser',
    ),
}

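In your_api.py:

import os

from rest_framework import status
from rest_framework.parsers import MultiPartParser
from rest_framework.permissions import IsAuthenticated
from rest_framework.response import Response
from rest_framework.views import APIView

from .permissions import IsAdminOrReadOnly  # custom permission class; module path is an assumption


class FileUploadView(APIView):  # view name is hypothetical
    permission_classes = (IsAuthenticated, IsAdminOrReadOnly)
    parser_classes = (MultiPartParser,)

    def post(self, request, format=None):
        try:
            # Read uploaded files
            my_file = request.FILES['data']
            # Target directory is an assumption; basename() drops any client-supplied path
            filename = os.path.join('/tmp', os.path.basename(my_file.name))
            with open(filename, 'wb+') as temp_file:
                # chunks() avoids reading a large upload into memory at once
                for chunk in my_file.chunks():
                    temp_file.write(chunk)
        except (KeyError, IOError):
            return Response(status=status.HTTP_400_BAD_REQUEST)
        return Response(status=status.HTTP_201_CREATED)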

P.S. If you use a different field name in the curl command, change 'data' accordingly!

Reference:

http://stackoverflow.com/questions/21012538/retrieve-json-from-request-files-in-django-without-writing-to-file


If you want to get the content of the uploaded file, you can directly use its read() method.

Something like:

if 'data' in request.FILES:
    uploaded = request.FILES['data']
    data = uploaded.read()
    # you now have the file contents in data

[Virtual Switching] What are macvlan and macvtap?

For quick reference, here is an excerpt on what macvlan and macvtap are, taken from the following slides:

https://www.netdev01.org/docs/netdev_tutorial_bridge_makita_150213.pdf

macvlan

• VLAN using MAC addresses instead of 802.1Q tags
• 4 types of mode:
  • private
  • vepa
  • bridge
  • passthru
• Uses unicast filtering if supported, instead of promiscuous mode (except for passthru)
  • Unicast filtering allows the NIC to receive multiple MAC addresses
• Lightweight bridge
  • No source learning
  • No STP
  • Only one uplink
  • Allows traffic between macvlans (via the macvlan stack)

macvtap

• Used with KVM, with three modes:
  • private
  • vepa
  • bridge
• tap-like macvlan variant
  • packet reception -> file read
  • file write -> packet transmission
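Both device types are created with iproute2's `ip link add ... type macvlan|macvtap mode <mode>`. Here is a small Python sketch that builds that command and rejects unknown modes. The mode list is the four macvlan modes above. I am assuming macvtap accepts the same set, even though the slides list three for KVM. The function name is hypothetical.

```python
from typing import List

MACVLAN_MODES = ("private", "vepa", "bridge", "passthru")


def ip_link_add(lower: str, name: str, kind: str = "macvlan",
                mode: str = "bridge") -> List[str]:
    """Build an iproute2 command creating a macvlan or macvtap device
    named `name` on top of the uplink `lower`."""
    if kind not in ("macvlan", "macvtap"):
        raise ValueError("kind must be macvlan or macvtap")
    if mode not in MACVLAN_MODES:
        raise ValueError("mode must be one of " + ", ".join(MACVLAN_MODES))
    return ["ip", "link", "add", "link", lower, "name", name,
            "type", kind, "mode", mode]
```

Running `subprocess.run(ip_link_add("eth0", "macvtap0", kind="macvtap"), check=True)` as root should create the device. As far as I know, a macvtap device also gets a /dev/tap<ifindex> character device, which is the "file" that the read/write bullets refer to.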

Further reading:

http://140.120.15.179/Presentation/20150203/

Virtual networking: TUN/TAP, MacVLAN, and MacVTap


Friday, November 20, 2015

[GitHub] How to rebase your branch from upstream branch?

If you have forked a repository and want to bring your branch up to date with the upstream branch, these GitHub articles explain how:

https://help.github.com/articles/configuring-a-remote-for-a-fork/

https://help.github.com/articles/syncing-a-fork/


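- List the currently configured remote repositories for your fork:

git remote -v

- Specify a new remote upstream repository that will be synced with the fork:

git remote add upstream https://github.com/ORIGINAL_OWNER/ORIGINAL_REPOSITORY.git

- Verify the new upstream repository you've specified for your fork:

git remote -v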

- Fetch the branches and their respective commits from the upstream repository. Commits to master will be stored in a local branch, upstream/master:

git fetch upstream
# remote: Counting objects: 75, done.
# remote: Compressing objects: 100% (53/53), done.
# remote: Total 62 (delta 27), reused 44 (delta 9)
# Unpacking objects: 100% (62/62), done.
# From https://github.com/ORIGINAL_OWNER/ORIGINAL_REPOSITORY
#  * [new branch]      master     -> upstream/master

- Check out your fork's local master branch:

git checkout master
# Switched to branch 'master'

- Merge the changes from upstream/master into your local master branch. This brings your fork's master branch into sync with the upstream repository, without losing your local changes:

git merge upstream/master
# Updating a422352..5fdff0f
# Fast-forward
#  README    | 9 -------
#  README.md | 7 ++++++
#  2 files changed, 7 insertions(+), 9 deletions(-)
#  delete mode 100644 README
#  create mode 100644 README.md

If your local branch didn't have any unique commits, Git will instead perform a "fast-forward":

git merge upstream/master
# Updating 34e91da..16c56ad
# Fast-forward
#  README.md | 5 +++--
#  1 file changed, 3 insertions(+), 2 deletions(-)
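The steps above can be scripted. Here is a Python sketch that adds the `upstream` remote only if `git remote -v` does not already list it, then fetches, checks out, and merges. The function names are hypothetical, and `upstream_url` is whatever ORIGINAL_OWNER/ORIGINAL_REPOSITORY URL applies to you.

```python
import subprocess
from typing import Dict


def parse_remotes(output: str) -> Dict[str, str]:
    """Map remote name -> fetch URL from `git remote -v` output."""
    remotes = {}
    for line in output.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[2] == "(fetch)":
            remotes[parts[0]] = parts[1]
    return remotes


def sync_fork(upstream_url: str, branch: str = "master") -> None:
    """Add 'upstream' if missing, then fetch, check out and merge."""
    out = subprocess.run(["git", "remote", "-v"], check=True,
                         capture_output=True, text=True).stdout
    if "upstream" not in parse_remotes(out):
        subprocess.run(["git", "remote", "add", "upstream", upstream_url], check=True)
    subprocess.run(["git", "fetch", "upstream"], check=True)
    subprocess.run(["git", "checkout", branch], check=True)
    subprocess.run(["git", "merge", "upstream/" + branch], check=True)
```

To actually rebase as in the title, replace the last step with `git rebase upstream/master`. This replays your local commits on top of the upstream branch instead of creating a merge commit.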
