# Linkerd2 Demo Case Introduction

## Steps

### Step 1: Install the CLI

```shell
curl -sL https://run.linkerd.io/install | sh
export PATH=$PATH:$HOME/.linkerd2/bin
```
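The `export` line above only affects the current shell session. A minimal sketch of an idempotent PATH update you could put in `~/.profile`, assuming the installer used its default target directory `$HOME/.linkerd2/bin`; `path_append` is a hypothetical helper name, not part of Linkerd:

```shell
#!/bin/sh
# path_append DIR (hypothetical helper): add DIR to PATH only if PATH
# does not already contain it, so re-sourcing a profile does not keep
# growing PATH.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;                      # already present: nothing to do
    *) PATH="${PATH:+$PATH:}$1" ;;    # append, without a leading ':' if PATH was empty
  esac
  export PATH
}

# Assumption: the install script used its default location.
path_append "$HOME/.linkerd2/bin"
```

After that, `linkerd version --client` should print the CLI version.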

### Step 2: Validate your Kubernetes cluster

```shell
linkerd check --pre
```

### Step 3: Install the control plane onto your cluster

```shell
linkerd install | kubectl apply -f -
linkerd check
linkerd viz install | kubectl apply -f -   # on-cluster metrics stack
```
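Steps 2 and 3 can be scripted so that each command runs only if the previous one succeeded. A minimal sketch under two assumptions: Linkerd 2.12 or later, where `linkerd install --crds` must be applied before `linkerd install` (drop that line on older releases), and the hypothetical helper names `apply_rendered` and `install_linkerd`. Rendering each manifest into a temp file before `kubectl apply` means a failing `linkerd` command stops the chain instead of piping partial output into `kubectl`:

```shell
#!/bin/sh
# Sketch of Steps 2-3 as one script (assumes Linkerd 2.12+ for "install --crds").

# apply_rendered ARGS... (hypothetical helper): render "linkerd ARGS..." into
# a temp file, then apply it. A linkerd failure returns non-zero without
# calling kubectl.
apply_rendered() {
  manifest=$(mktemp) || return 1
  if linkerd "$@" > "$manifest"; then
    kubectl apply -f "$manifest"
    rc=$?
  else
    rc=1
  fi
  rm -f "$manifest"
  return "$rc"
}

# install_linkerd (hypothetical helper): pre-checks, CRDs, control plane,
# viz extension, post-checks. The && chain stops at the first failing step.
install_linkerd() {
  linkerd check --pre &&
    apply_rendered install --crds &&
    apply_rendered install &&
    linkerd check &&
    apply_rendered viz install &&
    linkerd viz check
}
```

After sourcing the file, run `install_linkerd`; a non-zero `$?` tells you some step failed, and the output of the last command shows which one.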

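### Step 4: Explore Linkerd

```shell
linkerd viz dashboard &                      # port-forwards the dashboard and opens it in a browser
linkerd -n linkerd-viz viz top deploy/web    # live traffic to the dashboard's own web deployment
```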

### Step 5: Install the demo app

```shell
curl -sL https://run.linkerd.io/emojivoto.yml \
  | kubectl apply -f -

# Add Linkerd to emojivoto:
kubectl get -n emojivoto deploy -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

linkerd -n emojivoto check --proxy
```
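`linkerd inject` does not add the proxy itself. It adds the `linkerd.io/inject: enabled` annotation to each pod template. The changed template triggers a rollout, and Linkerd's proxy injector adds the `linkerd-proxy` sidecar to the new pods. This is why the reference output further down shows two ReplicaSets per deployment, with the older one scaled to 0. The same get/inject/apply pipeline comes back in Step 7 and in the experiments, so it can be wrapped in a helper. A sketch using the hypothetical name `mesh_deployments`. It stages through temp files, so a failing `kubectl get` or `linkerd inject` cannot pipe partial YAML into `kubectl apply`:

```shell
#!/bin/sh
# mesh_deployments NAMESPACE inject|uninject  (hypothetical helper)
# Same effect as:
#   kubectl get -n NS deploy -o yaml | linkerd ACTION - | kubectl apply -f -
# but each stage must succeed before the next one runs.
mesh_deployments() {
  ns=$1
  action=$2
  case $action in
    inject|uninject) ;;
    *) echo "usage: mesh_deployments NAMESPACE inject|uninject" >&2
       return 2 ;;
  esac
  before=$(mktemp) || return 1
  after=$(mktemp) || { rm -f "$before"; return 1; }
  kubectl get -n "$ns" deploy -o yaml > "$before" &&
    linkerd "$action" "$before" > "$after" &&
    kubectl apply -f "$after"
  rc=$?
  rm -f "$before" "$after"
  return "$rc"
}
```

`mesh_deployments emojivoto inject` reproduces the injection above. `mesh_deployments emojivoto uninject` does the same job as the first command of Step 7.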

### Step 6: Watch it run

```shell
linkerd -n emojivoto viz stat deploy
linkerd -n emojivoto viz top deploy
linkerd -n emojivoto viz tap deploy/web
```

### Step 7: Uninstall

```shell
kubectl get -n emojivoto deploy -o yaml \
  | linkerd uninject - \
  | kubectl apply -f -

curl -sL https://run.linkerd.io/emojivoto.yml \
  | kubectl delete -f -

linkerd viz uninstall | kubectl delete -f -
linkerd uninstall | kubectl delete -f -
```

## Experiments

### Test with my own namespace (default)

```shell
kubectl get -n default deploy -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

# Uninject
kubectl get -n default deploy -o yaml \
  | linkerd uninject - \
  | kubectl apply -f -
```
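A single `curl` from `tiefighter` produces only one request, but `linkerd viz stat` reports rates over a time window (one minute by default). A minimal sketch that repeats the landing request from above so `deathstar` has traffic to report. It assumes the `tiefighter` pod and the `deathstar` service used above exist in `default`. `send_landings` is a hypothetical helper name:

```shell
#!/bin/sh
# send_landings [COUNT] [INTERVAL] (hypothetical helper): repeat the landing
# request from the tiefighter pod COUNT times (default 10), sleeping
# INTERVAL seconds (default 1) between requests.
send_landings() {
  count=${1:-10}
  interval=${2:-1}
  i=0
  while [ "$i" -lt "$count" ]; do
    kubectl exec tiefighter -- \
      curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
    i=$((i + 1))
    sleep "$interval"
  done
}
```

For example, run `send_landings 30` in one terminal and `linkerd -n default viz stat deploy` in another while the loop is running.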

### Did not work: injecting the Pods directly

```shell
kubectl get -n default pod -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

# Uninject
kubectl get -n default pod -o yaml \
  | linkerd uninject - \
  | kubectl apply -f -
```
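The most likely reason this had no effect is that Linkerd's proxy injector is an admission webhook that adds the sidecar when a pod is *created*. On Pod objects, `linkerd inject` only adds the `linkerd.io/inject: enabled` annotation, and changing the annotations of a running Pod does not recreate it. With a Deployment, the changed pod template rolls out new pods, and those do get the proxy. The sketch below checks which pods actually carry a proxy by looking for a container named `linkerd-proxy`, which is assumed here to be Linkerd's default sidecar name. `has_proxy` and `report_mesh` are hypothetical helper names:

```shell
#!/bin/sh
# has_proxy "NAME NAME ...": succeed if the space-separated container list
# contains the Linkerd sidecar (assumed to be named "linkerd-proxy").
has_proxy() {
  case " $1 " in
    *" linkerd-proxy "*) return 0 ;;
    *) return 1 ;;
  esac
}

# report_mesh NAMESPACE: print "<pod> meshed" or "<pod> NOT meshed" per pod.
# kubectl's jsonpath emits one "pod<TAB>container names" line per pod.
report_mesh() {
  kubectl get pods -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].name}{"\n"}{end}' |
  while IFS="$(printf '\t')" read -r pod containers; do
    if has_proxy "$containers"; then
      echo "$pod meshed"
    else
      echo "$pod NOT meshed"
    fi
  done
}
```

For example, `report_mesh default` after the Deployment-level inject should list the `deathstar` pods as meshed.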

## Takeaways

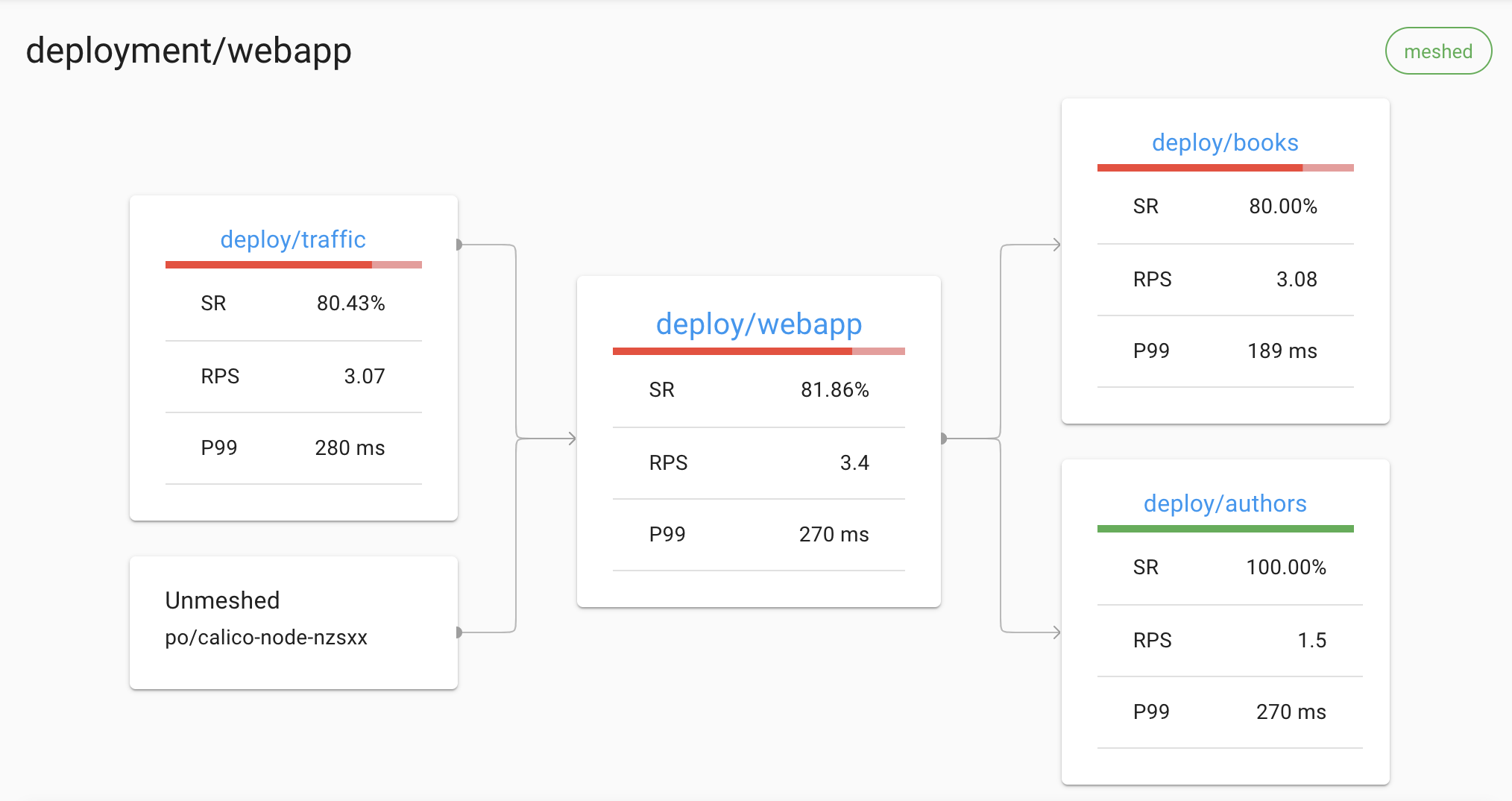

Open the Linkerd dashboard to see all the services in the demo app. Since the demo app comes with a load generator (`vote-bot`), we can see live traffic metrics for both HTTP/2 (gRPC) and HTTP/1 (the web frontend) by running `linkerd -n emojivoto viz stat deploy`.

This will show the “golden” metrics for each deployment:

- Success rates

- Request rates

- Latency distribution percentiles

The `inject` command makes adding existing workloads to the mesh very convenient.

Metrics are not reported from a Service perspective, but the per-deployment and per-pod views largely cover this.

## Reference Output

```shell
$ kubectl get all -n emojivoto
NAME                            READY   STATUS    RESTARTS   AGE
pod/emoji-696d9d8f95-p2xv5      2/2     Running   0          64m
pod/vote-bot-6d7677bb68-tmbfq   2/2     Running   0          64m
pod/voting-ff4c54b8d-whssp      2/2     Running   0          64m
pod/web-5f86686c4d-l8lcb        2/2     Running   0          64m

NAME                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
service/emoji-svc    ClusterIP   10.106.16.101    <none>        8080/TCP,8801/TCP   105m
service/voting-svc   ClusterIP   10.109.94.225    <none>        8080/TCP,8801/TCP   105m
service/web-svc      ClusterIP   10.100.247.154   <none>        80/TCP              105m

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/emoji      1/1     1            1           105m
deployment.apps/vote-bot   1/1     1            1           105m
deployment.apps/voting     1/1     1            1           105m
deployment.apps/web        1/1     1            1           105m

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/emoji-66ccdb4d86      0         0         0       105m
replicaset.apps/emoji-696d9d8f95      1         1         1       64m
replicaset.apps/vote-bot-69754c864f   0         0         0       105m
replicaset.apps/vote-bot-6d7677bb68   1         1         1       64m
replicaset.apps/voting-f999bd4d7      0         0         0       105m
replicaset.apps/voting-ff4c54b8d      1         1         1       64m
replicaset.apps/web-5f86686c4d        1         1         1       64m
replicaset.apps/web-79469b946f        0         0         0       105m
```

```shell
$ linkerd -n emojivoto viz stat deploy
NAME       MESHED   SUCCESS   RPS      LATENCY_P50   LATENCY_P95   LATENCY_P99   TCP_CONN
emoji      1/1      100.00%   2.3rps   1ms           1ms           1ms           3
vote-bot   1/1      100.00%   0.3rps   1ms           1ms           1ms           1
voting     1/1      87.01%    1.3rps   1ms           1ms           2ms           3
web        1/1      91.91%    2.3rps   2ms           16ms          19ms          3
```
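In this output, `voting` (87.01%) and `web` (91.91%) are the deployments below 100% success. A small awk sketch that flags such rows automatically. It assumes the column order shown above (NAME, MESHED, SUCCESS, ...). `low_success` and its threshold argument are illustrative names and are not part of the Linkerd CLI:

```shell
#!/bin/sh
# low_success [THRESHOLD]: read `linkerd viz stat` output on stdin and print
# "name success%" for each row whose SUCCESS is below THRESHOLD (default 100).
# Assumes the column order: NAME MESHED SUCCESS RPS ...
low_success() {
  awk -v limit="${1:-100}" '
    NR == 1 { next }              # skip the header row
    $3 ~ /%$/ {                   # only rows where SUCCESS is a percentage
      rate = $3
      sub(/%$/, "", rate)
      if (rate + 0 < limit + 0) print $1, $3
    }'
}
```

For example: `linkerd -n emojivoto viz stat deploy | low_success 95`.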

```shell
$ linkerd -n emojivoto viz top deploy
(press q to quit)
(press a/LeftArrowKey to scroll left, d/RightArrowKey to scroll right)

Source                     Destination             Method  Path                                                   Count  Best   Worst  Last   Success Rate
web-5f86686c4d-l8lcb       emoji-696d9d8f95-p2xv5  POST    /emojivoto.v1.EmojiService/ListAll                     42     562µs  5ms    937µs  100.00%
vote-bot-6d7677bb68-tmbfq  web-5f86686c4d-l8lcb    GET     /api/list                                              42     3ms    13ms   9ms    100.00%
web-5f86686c4d-l8lcb       emoji-696d9d8f95-p2xv5  POST    /emojivoto.v1.EmojiService/FindByShortcode             42     553µs  13ms   2ms    100.00%
vote-bot-6d7677bb68-tmbfq  web-5f86686c4d-l8lcb    GET     /api/vote                                              41     5ms    21ms   6ms    87.80%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteDoughnut               6      926µs  3ms    2ms    0.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteMrsClaus               4      1ms    2ms    2ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteRocket                 2      2ms    3ms    3ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VotePointUp2               2      2ms    3ms    3ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteCrossedSwords          2      2ms    3ms    3ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteWorldMap               2      739µs  2ms    2ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteDog                    2      2ms    2ms    2ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteOkWoman                2      951µs  2ms    2ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteNerdFace               2      2ms    9ms    9ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteConstructionWorkerMan  2      1ms    3ms    3ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteCheckeredFlag          2      863µs  3ms    863µs  100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/Vote100                    2      894µs  2ms    894µs  100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VotePizza                  2      1ms    3ms    3ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteJackOLantern           2      884µs  3ms    3ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteManDancing             2      761µs  1ms    1ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteBeachUmbrella          2      1ms    2ms    1ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteNoGoodWoman            2      1ms    2ms    2ms    100.00%
web-5f86686c4d-l8lcb       voting-ff4c54b8d-whssp  POST    /emojivoto.v1.VotingService/VoteGuardsman              2      968µs  2ms    2ms    100.00%
```

```shell
$ linkerd -n emojivoto viz tap deploy/web
req id=11:0 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/list
req id=11:1 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/ListAll
rsp id=11:1 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1792µs
end id=11:1 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=193µs response-length=2140B
rsp id=11:0 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=4294µs
end id=11:0 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=358µs response-length=4513B
req id=11:2 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/vote
req id=11:3 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/FindByShortcode
rsp id=11:3 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1657µs
end id=11:3 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=175µs response-length=25B
req id=11:4 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :method=POST :authority=voting-svc.emojivoto:8080 :path=/emojivoto.v1.VotingService/VoteDoughnut
rsp id=11:4 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :status=200 latency=2217µs
end id=11:4 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true grpc-status=Unknown duration=161µs response-length=0B
rsp id=11:2 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=500 latency=8272µs
end id=11:2 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=171µs response-length=51B
req id=11:5 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/list
req id=11:6 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/ListAll
rsp id=11:6 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1483µs
end id=11:6 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=145µs response-length=2140B
rsp id=11:5 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=5621µs
end id=11:5 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=336µs response-length=4513B
req id=11:7 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/vote
req id=11:8 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/FindByShortcode
rsp id=11:8 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1477µs
end id=11:8 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=206µs response-length=28B
req id=11:9 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :method=POST :authority=voting-svc.emojivoto:8080 :path=/emojivoto.v1.VotingService/VoteManDancing
rsp id=11:9 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true :status=200 latency=1560µs
end id=11:9 proxy=out src=10.244.109.115:33470 dst=10.244.109.84:8080 tls=true grpc-status=OK duration=144µs response-length=5B
rsp id=11:7 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=7140µs
end id=11:7 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=96µs response-length=0B
req id=11:10 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/list
req id=11:11 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :method=POST :authority=emoji-svc.emojivoto:8080 :path=/emojivoto.v1.EmojiService/ListAll
rsp id=11:11 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true :status=200 latency=1855µs
end id=11:11 proxy=out src=10.244.109.115:55194 dst=10.244.109.109:8080 tls=true grpc-status=OK duration=375µs response-length=2140B
rsp id=11:10 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :status=200 latency=3786µs
end id=11:10 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true duration=339µs response-length=4513B
req id=11:12 proxy=in src=10.244.109.92:46446 dst=10.244.109.115:8080 tls=true :method=GET :authority=web-svc.emojivoto:80 :path=/api/vote
```
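The tap stream explains part of `web`'s lower success rate. Request `11:2` (`GET /api/vote`) called `VoteDoughnut`, which ended with `grpc-status=Unknown`, and `web` then answered `vote-bot` with `:status=500`. This matches the `VoteDoughnut` row at 0.00% in the `viz top` output. The sketch below pulls such failures out of a saved tap log. It assumes the plain-text `key=value` format shown above, and `tap_failures` is a hypothetical helper name:

```shell
#!/bin/sh
# tap_failures: read `linkerd viz tap` text output on stdin and print
# "<id> <field>" for every "rsp" event with HTTP status >= 400 and every
# "end" event whose grpc-status is not OK. Assumes key=value fields.
tap_failures() {
  awk '{
    id = ""
    for (i = 1; i <= NF; i++) {
      if ($i ~ /^id=/) id = substr($i, 4)                       # text after "id="
      if ($1 == "rsp" && $i ~ /^:status=/ && substr($i, 9) + 0 >= 400)
        print id, $i
      if ($1 == "end" && $i ~ /^grpc-status=/ && $i != "grpc-status=OK")
        print id, $i
    }
  }'
}
```

For example, capture `linkerd -n emojivoto viz tap deploy/web > tap.log` for a while, stop it with Ctrl-C, then run `tap_failures < tap.log`.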