I have introduced ZeroMQ as a very powerful tool for turning your application into a distributed system. If you look through the contents of http://zguide.zeromq.org/page:all, you will see that there is a lot of material to study in detail. For that reason, I will summarize what I have studied about ZeroMQ and give some notes on the important concepts.

Request-Reply pattern:

The REQ-REP socket pair is lockstep. The client does zmq_msg_send(3) and then zmq_msg_recv(3), in a loop.

Both the client and the server create a ØMQ context to work with, and a socket.

If you kill the server (Ctrl-C) and restart it, the client won't recover properly.

Take care with strings in C:

When you receive string data from ØMQ in C, you simply cannot trust that it's safely terminated. Every single time you read a string you should allocate a new buffer with space for an extra byte, copy the string, and terminate it properly with a null.

So let's establish the rule that ØMQ strings are length-specified, and are sent on the wire without a trailing null.
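A minimal sketch of that rule in plain C follows. The helper name `frame_to_cstring` is hypothetical and not part of libzmq; it takes a pointer and a length, which in a real program would presumably come from zmq_msg_data(3) and zmq_msg_size(3).

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

// Copy `size` bytes of frame data into a fresh, null-terminated C string.
// Returns NULL if allocation fails; the caller frees the result.
// (frame_to_cstring is a hypothetical helper name, not a libzmq function.)
static char *frame_to_cstring (const void *data, size_t size)
{
    assert (data != NULL || size == 0);
    char *string = (char *) malloc (size + 1);  // extra byte for the terminator
    if (string == NULL)
        return NULL;
    if (size > 0)
        memcpy (string, data, size);
    string [size] = '\0';
    return string;
}
```

The copy costs one allocation per string, but it means the rest of the program can use ordinary C string functions without reading past the end of the received data.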

Version Reporting:

int major, minor, patch;

zmq_version (&major, &minor, &patch);

printf ("Current 0MQ version is %d.%d.%d\n", major, minor, patch);

Publish-Subscribe Pattern:

- A subscriber can connect to more than one publisher, using one 'connect' call each time. Data will then arrive and be interleaved ("fair-queued") so that no single publisher drowns out the others.

- If a publisher has no connected subscribers, then it will simply drop all messages.

- If you're using TCP, and a subscriber is slow, messages will queue up on the publisher. We'll look at how to protect publishers against this, using the "high-water mark" later.

- In ØMQ 2.x, filtering happens at the subscriber side, not the publisher side. This means, over TCP, that a publisher will send all messages to all subscribers, which will then drop messages they don't want. ØMQ 3.x moves filtering to the publisher side.

Note that when you use a SUB socket you must set a subscription using zmq_setsockopt(3) and SUBSCRIBE. Without a subscription, the socket receives no messages.

The PUB-SUB socket pair is asynchronous. The client does zmq_msg_recv(3), in a loop.

The subscriber will always miss the first messages that the publisher sends because, as the subscriber connects to the publisher (something that takes a small but non-zero time), the publisher may already be sending messages out.

1. In Chapter Two I'll explain how to synchronize a publisher and subscribers so that you don't start to publish data until the subscriber(s) really are connected and ready.

2. The alternative to synchronization is to simply assume that the published data stream is infinite and has no start, and no end. This is how we built our weather client example.

You can stop and restart the server as often as you like, and the client will keep working.

Divide and Conquer

We have to synchronize the start of the batch with all workers being up and running.

If you don't synchronize the start of the batch somehow, the system won't run in parallel at all.

The ventilator's PUSH socket distributes tasks to workers evenly. This is called load-balancing and it's something we'll look at again in more detail.

The sink's PULL socket collects results from workers evenly. This is called fair-queuing.

Programming with 0MQ

Sockets are not threadsafe. It became legal behavior to migrate sockets from one thread to another in ØMQ/2.1, but this remains dangerous unless you use a "full memory barrier".

ØMQ applications start by creating a context; in C, it's the zmq_ctx_new(3) call. You should create and use exactly one context in your process.

If you're using the fork() system call, each process needs its own context.

- If you are opening and closing a lot of sockets, that's probably a sign you need to redesign your application.

- When you exit the program, close your sockets and then call zmq_ctx_destroy(3). This destroys the context.

First, do not try to use the same socket from multiple threads.

Next, you need to shut down each socket that has ongoing requests.

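Finally, destroy the context. This causes any blocking receives, polls, or sends in threads that share the context to return with an error. Catch that error, and then set linger on, and close sockets in that thread, and exit. Do not destroy the same context twice.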

Brokers are excellent at reducing the complexity of large networks. But adding broker-based messaging to a product like Zookeeper would make it worse, not better. It would mean adding an additional big box, and a new single point of failure. A broker rapidly becomes a bottleneck and a new risk to manage.

And this is ØMQ: an efficient, embeddable library that solves most of the problems an application needs to become nicely elastic across a network, without much cost.

- It handles I/O asynchronously, in background threads. These communicate with application threads using lock-free data structures, so concurrent ØMQ applications need no locks, semaphores, or other wait states.

- Components can come and go dynamically and ØMQ will automatically reconnect. This means you can start components in any order. You can create "service-oriented architectures" (SOAs) where services can join and leave the network at any time.

- It queues messages automatically when needed. It does this intelligently, pushing messages as close as possible to the receiver before queuing them.

- It has ways of dealing with over-full queues (called "high water mark"). When a queue is full, ØMQ automatically blocks senders, or throws away messages, depending on the kind of messaging you are doing (the so-called "pattern").

- It lets your applications talk to each other over arbitrary transports: TCP, multicast, in-process, inter-process. You don't need to change your code to use a different transport.

- It handles slow/blocked readers safely, using different strategies that depend on the messaging pattern.

- It lets you route messages using a variety of patterns such as request-reply and publish-subscribe. These patterns are how you create the topology, the structure of your network.

- It lets you create proxies to queue, forward, or capture messages with a single call. Proxies can reduce the interconnection complexity of a network.

- It delivers whole messages exactly as they were sent, using a simple framing on the wire. If you write a 10k message, you will receive a 10k message.

- It does not impose any format on messages. They are blobs of zero to gigabytes large. When you want to represent data you choose some other product on top, such as Google's protocol buffers, XDR, and others.

- It handles network errors intelligently. Sometimes it retries, sometimes it tells you an operation failed.

- It reduces your carbon footprint. Doing more with less CPU means your boxes use less power, and you can keep your old boxes in use for longer. Al Gore would love ØMQ.

Missing Message Problem Solver

The main change in 3.x is that PUB-SUB works properly, as in, the publisher only sends subscribers stuff they actually want. In 2.x, publishers send everything and the subscribers filter.

Most of the API is backwards compatible, except a few blockheaded changes that went into 3.0 with no real regard to the cost of breaking existing code. The syntax of zmq_send(3) and zmq_recv(3) changed, and ZMQ_NOBLOCK got rebaptised to ZMQ_DONTWAIT.

So the minimal change for C/C++ apps that use the low-level libzmq API is to replace all calls to zmq_send with zmq_msg_send, and zmq_recv with zmq_msg_recv.

Plugging Sockets into the Topology

So mostly it's obvious which node should be doing zmq_bind(3) (the server) and which should be doing zmq_connect(3) (the client).

A server node can bind to many endpoints and it can do this using a single socket. This means it will accept connections across different transports:

zmq_bind (socket, "tcp://*:5555");

zmq_bind (socket, "tcp://*:9999");

zmq_bind (socket, "ipc://myserver.ipc");

Using Sockets to Carry Data

Let's look at the main differences between TCP sockets and ØMQ sockets when it comes to carrying data:

- ØMQ sockets carry messages, rather than bytes (as in TCP) or frames (as in UDP). A message is a length-specified blob of binary data. We'll come to messages shortly; their design is optimized for performance and thus somewhat tricky to understand.

- ØMQ sockets do their I/O in a background thread. This means that messages arrive in a local input queue, and are sent from a local output queue, no matter what your application is busy doing. These are configurable memory queues, by the way.

- ØMQ sockets can, depending on the socket type, be connected to (or from, it's the same) many other sockets. Where TCP emulates a one-to-one phone call, ØMQ implements one-to-many (like a radio broadcast), many-to-many (like a post office), many-to-one (like a mail box), and even one-to-one.

- ØMQ sockets can send to many endpoints (creating a fan-out model), or receive from many endpoints (creating a fan-in model).

Unicast Transports

ØMQ provides a set of unicast transports (inproc, ipc, and tcp) and multicast transports (epgm, pgm). The inter-process transport, ipc, is like tcp except that it is abstracted from the LAN, so you don't need to specify IP addresses or domain names.

It has one limitation: it does not work on Windows. This may be fixed in future versions of ØMQ.

The inter-thread transport, inproc, is a connected signaling transport. It is much faster than tcp or ipc. This transport has a specific limitation compared to ipc and tcp: you must do bind before connect.

ØMQ is not a neutral carrier: it imposes a framing on the transport protocols it uses. This framing is not compatible with existing protocols, which tend to use their own framing.

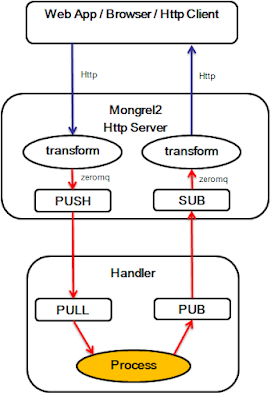

You need to implement whatever protocol you want to speak in any case, but you can connect that protocol server (which can be extremely thin) to a ØMQ backend that does the real work. The beautiful part here is that you can then extend your backend with code in any language, running locally or remotely, as you wish. Zed Shaw's Mongrel2 web server is a great example of such an architecture.

I/O Threads

We said that ØMQ does I/O in a background thread. When you create a new context it starts with one I/O thread.

int io_threads = 4;

void *context = zmq_ctx_new ();

zmq_ctx_set (context, ZMQ_IO_THREADS, io_threads);

assert (zmq_ctx_get (context, ZMQ_IO_THREADS) == io_threads);

Limiting Socket Use

By default, a ØMQ socket will continue to accept connections until your operating system runs out of file handles. You can set a limit using another zmq_ctx_set(3) call:

int max_sockets = 1024;

void *context = zmq_ctx_new ();

zmq_ctx_set (context, ZMQ_MAX_SOCKETS, max_sockets);

assert (zmq_ctx_get (context, ZMQ_MAX_SOCKETS) == max_sockets);

Core Messaging Patterns

- Request-reply, which connects a set of clients to a set of services. This is a remote procedure call and task distribution pattern.

- Publish-subscribe, which connects a set of publishers to a set of subscribers. This is a data distribution pattern.

- Pipeline, which connects nodes in a fan-out / fan-in pattern that can have multiple steps, and loops. This is a parallel task distribution and collection pattern.

- Exclusive pair, which connects two sockets in an exclusive pair. This is a pattern you should use only to connect two threads in a process. We'll see an example at the end of this chapter.

The zmq_socket(3) man page is fairly clear about the patterns; it's worth reading several times until it starts to make sense. These are the socket combinations that are valid for a connect-bind pair (either side can bind):

- PUB and SUB

- REQ and REP

- REQ and ROUTER

- DEALER and REP

- DEALER and ROUTER

- DEALER and DEALER

- ROUTER and ROUTER

- PUSH and PULL

- PAIR and PAIR

Working with Messages

In memory, ØMQ messages are zmq_msg_t structures.

Note that when you have passed a message to zmq_msg_send(3), ØMQ will clear the message, i.e. set the size to zero. You cannot send the same message twice, and you cannot access the message data after sending it.

If you want to send the same message more than once, create a second message, initialize it using zmq_msg_init(3) and then use zmq_msg_copy(3) to create a copy of the first message.

ØMQ also supports multi-part messages, which let you send or receive a list of frames as a single on-the-wire message.

Handle Multiple Sockets

To actually read from multiple sockets at once, use zmq_poll(3).

Multi-part Messages

// Send every frame except the last with ZMQ_SNDMORE

zmq_msg_send (&message, socket, ZMQ_SNDMORE);

…

zmq_msg_send (&message, socket, ZMQ_SNDMORE);

…

// The final frame is sent with no flags

zmq_msg_send (&message, socket, 0);

while (1) {

zmq_msg_t message;

zmq_msg_init (&message);

zmq_msg_recv (&message, socket, 0);

// Process the message frame

zmq_msg_close (&message);

int more;

size_t more_size = sizeof (more);

zmq_getsockopt (socket, ZMQ_RCVMORE, &more, &more_size);

if (!more)

break; // Last message frame

}

If you are using zmq_poll(3), when you receive the first part of a message, all the rest has also arrived.

Intermediaries and Proxies

The messaging industry calls this "intermediation", meaning that the stuff in the middle deals with either side. In ØMQ we call these proxies, queues, forwarders, devices, or brokers, depending on the context.

In classic messaging, this is the job of the "message broker". ØMQ doesn't come with a message broker as such, but it lets us build intermediaries quite easily.

Pub-Sub Network with a Proxy ==>

The best analogy is an HTTP proxy; it's there but doesn't have any special role. Adding a pub-sub proxy solves the dynamic discovery problem in our example.